HGI Area Under the Curve: A Comprehensive Guide to Calculation, Applications, and Validation in Drug Development

Abstract

This article provides researchers and drug development professionals with a complete guide to the Human Genetic Integration (HGI) Area Under the Curve (AUC) calculation. It covers foundational concepts linking genetic data to quantitative phenotypes, step-by-step methodologies for HGI-AUC calculation, and the role of HGI-AUC in therapeutic target prioritization. The guide addresses common analytical pitfalls and optimization techniques, and critically reviews validation standards and comparative performance against other genetic evidence metrics. The content aims to enhance the rigor and interpretation of genetic evidence in translational research pipelines.

Understanding HGI-AUC: The Foundational Bridge Between Genetics and Quantitative Traits

Defining Human Genetic Integration (HGI) and Its Role in Translational Research

Human Genetic Integration (HGI) is a systematic framework that aggregates and analyzes human genetic data, from genome-wide association studies (GWAS), rare variant analyses, and functional genomics, to directly inform and prioritize translational research pipelines. By quantifying the genetic evidence that a drug target plays a causal role in disease, HGI aims to reduce the high failure rates of clinical development. This whitepaper, framed within HGI-informed Area Under the Curve (AUC) calculation research, details the core principles, quantitative metrics, experimental protocols, and reagent toolkits needed to implement HGI in translational science. The focus on AUC reflects the application of HGI to pharmacokinetic/pharmacodynamic (PK/PD) modeling and biomarker validation.

Defining Core HGI Quantitative Metrics

HGI relies on specific quantitative metrics to evaluate genetic evidence. The following table summarizes the key data points utilized in target prioritization and validation.

Table 1: Core Quantitative Metrics for Human Genetic Integration (HGI)

| Metric | Definition | Interpretation in Translational Context |
|---|---|---|
| Genetic Association p-value | Statistical significance of a variant-trait association. | Conventional genome-wide significance threshold: p < 5 × 10^-8. A lower p-value indicates stronger statistical evidence for association. |
| Odds Ratio (OR) / Beta Coefficient | Effect size of a risk-increasing (OR > 1) or protective (OR < 1) variant. | Informs the potential magnitude of therapeutic effect modulation. |
| Variant Allele Frequency (VAF) | Frequency of the alternative allele in a given population. | Determines population impact and the feasibility of stratified trials. |
| Phenotypic Variance Explained (R²) | Proportion of trait variance attributable to a genetic variant/locus. | Estimates the potential upper limit of therapeutic efficacy. |
| Colocalization Probability (PP4) | Posterior probability that GWAS and QTL (e.g., eQTL, pQTL) signals share a single causal variant. | Strengthens causal inference linking variant, target gene, and disease. |
| Mendelian Randomization (MR) p-value | Significance from an MR analysis testing the causal effect of an exposure (e.g., protein level) on an outcome. | Provides evidence for a causal, druggable relationship (e.g., genetically lower LDL cholesterol via PCSK9). |
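Several metrics in Table 1 can be derived directly from standard GWAS summary statistics. The sketch below assumes a per-allele effect `beta` with standard error `se` under an additive model; `summarize_variant` is a hypothetical helper. The odds ratio is meaningful only when `beta` is a log-odds ratio (case-control trait), and the R² expression is the usual additive-model approximation 2f(1 - f)β²/Var(y), meaningful only for a quantitative trait.

```python
import math

GENOME_WIDE_ALPHA = 5e-8  # conventional genome-wide significance threshold


def summarize_variant(beta: float, se: float, maf: float, trait_sd: float = 1.0) -> dict:
    """Derive Table 1 style metrics for one variant from its GWAS beta and SE.

    Assumes beta is either a log-odds ratio (case-control) or a per-allele
    effect on a quantitative trait whose standard deviation is trait_sd.
    """
    z = beta / se
    # Two-sided p-value under a standard normal null: P(|Z| >= |z|)
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return {
        "z": z,
        "p": p,
        "genome_wide_significant": p < GENOME_WIDE_ALPHA,
        # Only meaningful when beta is on the log-odds scale
        "odds_ratio": math.exp(beta),
        # Additive-model approximation to the variance explained by one SNP
        "r2_approx": 2.0 * maf * (1.0 - maf) * beta**2 / trait_sd**2,
    }
```

For example, `summarize_variant(0.1, 0.01, 0.3)` gives z = 10, a p-value far below 5 × 10^-8, and an approximate R² of 0.0042 for a standardized trait.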

HGI-Informed Experimental Protocols for Translational Validation

The following protocols are critical for moving from a genetically validated target to a therapeutic hypothesis, with emphasis on PK/PD (AUC) modeling.

Protocol: Colocalization Analysis for Causal Gene Identification

Objective: To determine if genetic associations for a disease trait and a molecular phenotype (e.g., gene expression) share a common causal variant, implicating specific gene regulation in disease etiology. Workflow:

- Data Curation: Obtain summary statistics for the disease GWAS and for a relevant quantitative trait locus (QTL) study (e.g., eQTL from GTEx, pQTL from plasma proteomics) for the genomic region of interest.

- Locus Definition: Define a ±500 kb window around the lead GWAS variant.

- Analysis Execution: Run a Bayesian colocalization analysis (e.g., using the coloc R package). Input variant IDs with effect sizes and their standard errors (or p-values, allele frequencies, and sample sizes) for both traits.

- Output Interpretation: Examine the posterior probabilities for hypotheses H0-H4 (PP0-PP4). A PP4 > 80% supports a shared causal variant, strengthening the causal link between the gene's expression/protein level and the disease.
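The colocalization step can be sketched from first principles using the approximate Bayes factor (ABF) approach that underlies `coloc.abf`: a Wakefield ABF is computed per SNP for each trait, and the ABFs are combined under a one-causal-variant-per-trait assumption into PP0-PP4. This is a minimal illustrative re-implementation, not the coloc package itself. The prior effect-size standard deviation of 0.15 (a common choice for a standardized quantitative trait) and the priors p1 = p2 = 1e-4 and p12 = 1e-5 are assumptions to be matched to the actual analysis.

```python
import numpy as np


def log_abf(z: np.ndarray, se: np.ndarray, prior_sd: float) -> np.ndarray:
    """Wakefield log approximate Bayes factor per SNP (association vs. null)."""
    v = se**2
    r = prior_sd**2 / (prior_sd**2 + v)
    return 0.5 * (np.log(1.0 - r) + r * z**2)


def logsumexp(x: np.ndarray) -> float:
    m = np.max(x)
    return float(m + np.log(np.sum(np.exp(x - m))))


def coloc_abf(z1, se1, z2, se2, prior_sd1=0.15, prior_sd2=0.15,
              p1=1e-4, p2=1e-4, p12=1e-5) -> np.ndarray:
    """Posterior probabilities PP0..PP4 for one region (same SNPs, same order, both traits)."""
    l1 = log_abf(np.asarray(z1, float), np.asarray(se1, float), prior_sd1)
    l2 = log_abf(np.asarray(z2, float), np.asarray(se2, float), prior_sd2)
    s1, s2, s12 = logsumexp(l1), logsumexp(l2), logsumexp(l1 + l2)
    # H3 sums over pairs of *different* causal SNPs: all pairs minus same-SNP pairs.
    # log(exp(a) - exp(b)) is computed stably as a + log(-expm1(b - a)).
    a = s1 + s2
    h3_pairs = a + np.log(-np.expm1(s12 - a))
    log_h = np.array([
        0.0,                                 # H0: no association
        np.log(p1) + s1,                     # H1: trait 1 only
        np.log(p2) + s2,                     # H2: trait 2 only
        np.log(p1) + np.log(p2) + h3_pairs,  # H3: both traits, distinct variants
        np.log(p12) + s12,                   # H4: both traits, shared variant
    ])
    return np.exp(log_h - logsumexp(log_h))
```

A strong signal at the same SNP in both traits concentrates posterior mass on H4, while peaks at different SNPs shift it to H3.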

Protocol: In Vitro Functional Validation using CRISPR/Cas9 in a Relevant Cell Model

Objective: To experimentally perturb the HGI-identified target gene and measure consequent changes in pathway activity or cellular phenotypes. Workflow:

- Cell Model Selection: Select a disease-relevant cell type (e.g., iPSC-derived hepatocytes for metabolic disease, microglia for Alzheimer's).

- CRISPR Design: Design sgRNAs targeting the non-coding variant region (for regulatory studies) or exonic regions (for knockout) of the candidate gene. Include non-targeting control sgRNAs.

- Delivery & Selection: Transfect or transduce cells with Cas9/sgRNA ribonucleoprotein complexes or viral vectors. Use antibiotic selection or FACS to enrich edited cells.

- Phenotypic Assay: Perform a high-content assay (e.g., imaging of lipid accumulation, ELISA for secreted inflammatory markers, RNA-seq for pathway analysis) 5-7 days post-editing.

- Statistical Analysis: Compare phenotypes between target-edited and control cells using ANOVA with appropriate multiple testing correction.
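One way to carry out this analysis is a one-way ANOVA per phenotypic readout (non-targeting control vs. each target sgRNA), followed by Benjamini-Hochberg false discovery rate control across readouts. The sketch assumes replicate values are already summarized per well; `anova_per_readout` and the readout names are hypothetical, and `scipy.stats.f_oneway` performs the ANOVA.

```python
import numpy as np
from scipy.stats import f_oneway


def benjamini_hochberg(pvals) -> np.ndarray:
    """Benjamini-Hochberg adjusted p-values (FDR q-values), in input order."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    ranked = p[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity, working down from the largest p-value
    ranked = np.minimum.accumulate(ranked[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.minimum(ranked, 1.0)
    return adjusted


def anova_per_readout(readouts: dict) -> dict:
    """Map readout name -> (ANOVA p-value, BH q-value).

    Each value in `readouts` is a list of per-group replicate arrays,
    e.g. [non_targeting, sgRNA_1, sgRNA_2].
    """
    names = list(readouts)
    pvals = np.array([f_oneway(*readouts[n]).pvalue for n in names])
    qvals = benjamini_hochberg(pvals)
    return {n: (p, q) for n, p, q in zip(names, pvals, qvals)}
```

A significant ANOVA only says that some group differs; post hoc contrasts of each sgRNA against the non-targeting control (e.g., Dunnett's test) would normally follow.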

Protocol: Integrating HGI into Preclinical PK/PD AUC Modeling

Objective: To utilize human genetic data on target modulation to parameterize preclinical PK/PD models, predicting clinically effective dose and exposure (AUC). Workflow:

- Parameter Identification: From HGI data, extract the natural human effect size (e.g., the change in disease risk per unit change in protein level or activity, derived from MR or pQTL beta coefficients).

- In Vitro to In Vivo Scaling: Establish the relationship between in vitro target engagement (TE) and functional modulation in a cellular assay.

- Model Development: Develop a compartmental PK/PD model. The PD component should incorporate the HGI-derived effect size as the maximal achievable therapeutic effect (Emax). The EC50 is informed by in vitro TE assays.

- AUC Simulation: Simulate various dosing regimens. Calculate the plasma concentration-time curve and the resulting target modulation-time profile. The AUC of the target modulation curve is the key integrative PD metric linking exposure to total effect.

- Dose Prediction: Identify the dose that produces a PD AUC equivalent to the protective genetic effect observed in human populations.
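The AUC simulation and dose-prediction steps can be sketched with a one-compartment oral PK model driving a direct Emax PD model. All parameter values (absorption rate `KA`, elimination rate `KE`, volume `VD`, bioavailability `F`, `emax`, `ec50`) and the target (an average target modulation of 0.3 over a 24 h interval, standing in for an HGI-derived protective effect) are hypothetical placeholders; a real model would be fitted to data in a tool such as NONMEM or Phoenix.

```python
import numpy as np

# Hypothetical PK parameters: KA, KE in 1/h; VD in L; F = oral bioavailability.
KA, KE, VD, F = 1.0, 0.1, 50.0, 0.8


def concentration(t, dose):
    """Plasma concentration (mg/L) after one oral dose (mg); one compartment, KA != KE."""
    return (F * dose * KA) / (VD * (KA - KE)) * (np.exp(-KE * t) - np.exp(-KA * t))


def trapezoid(y, t):
    """Trapezoidal-rule area under y(t)."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(t)) / 2.0)


def pd_auc(dose, t, emax, ec50):
    """AUC of the target-modulation curve E(t) = emax * C / (ec50 + C)."""
    c = concentration(t, dose)
    return trapezoid(emax * c / (ec50 + c), t)


def dose_for_target(target_auc, t, emax, ec50, lo=0.0, hi=1e4, tol=1e-6):
    """Bisection on dose, relying on PD AUC increasing monotonically with dose."""
    if pd_auc(hi, t, emax, ec50) < target_auc:
        raise ValueError("target PD AUC not reachable below the upper dose bound")
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if pd_auc(mid, t, emax, ec50) < target_auc:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


t = np.linspace(0.0, 24.0, 2401)
dose = dose_for_target(0.3 * 24.0, t, emax=1.0, ec50=0.5)
```

Bisection is adequate here because PD AUC rises monotonically with dose in this model; models with tolerance, feedback, or nonlinear clearance may not guarantee that and would need a more general root-finding or optimization approach.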

Visualization of Core HGI Concepts and Workflows

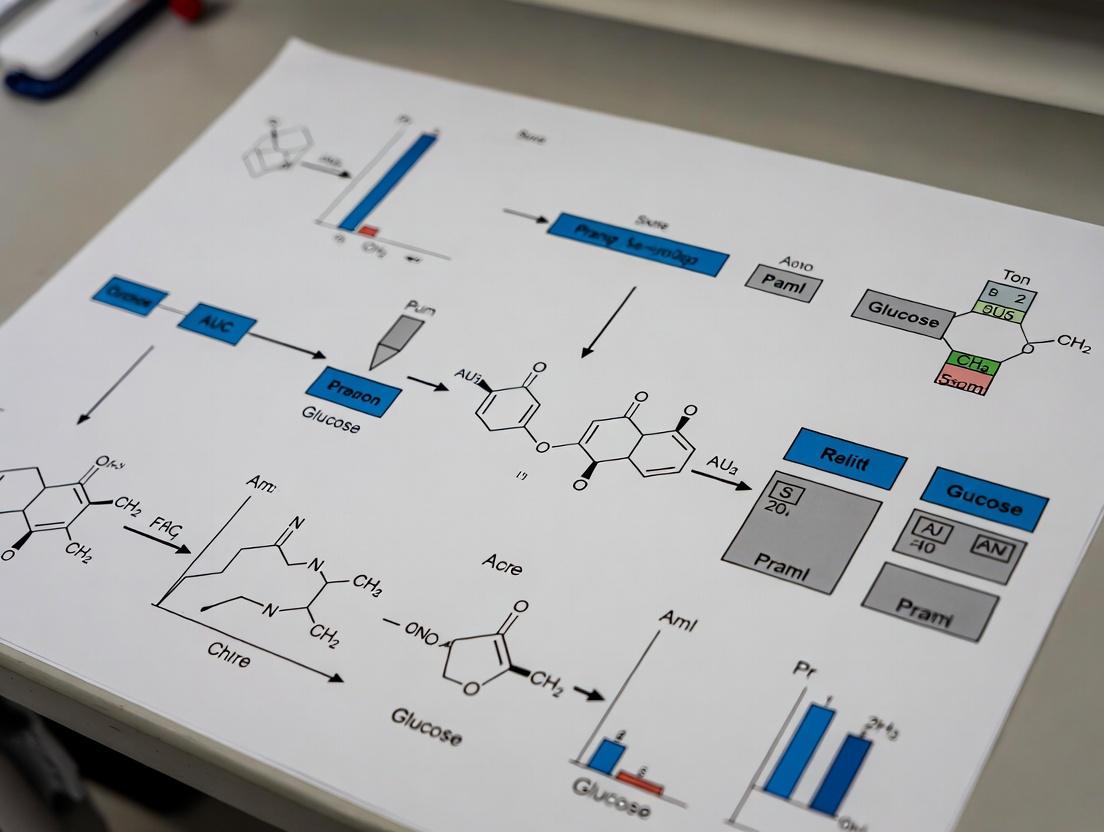

Diagram 1: HGI Translational Research Pipeline

Diagram 2: HGI Informs PK/PD AUC Modeling

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Reagents and Tools for HGI-Focused Translational Research

| Category / Item | Function & Application |
|---|---|
| CRISPR/Cas9 Editing | Function: Precise genome editing for functional validation of HGI-identified variants/genes. Application: Create isogenic cell lines with risk/protective alleles or knock out candidate genes in disease-relevant cell models (iPSCs, primary cells). |
| Induced Pluripotent Stem Cells (iPSCs) | Function: Provide a genetically tractable, disease-relevant human cellular platform. Application: Differentiate into target cell types (neurons, cardiomyocytes, hepatocytes) for functional assays and PK/PD pathway modeling. |
| Proteomics Kits (e.g., Olink, SomaScan) | Function: High-throughput, multiplexed quantification of proteins in plasma or cell supernatants. Application: Measure pQTL effects, validate protein-level changes after genetic perturbation, and identify pharmacodynamic biomarkers. |
| High-Content Imaging Systems | Function: Automated, multi-parameter cellular phenotyping. Application: Quantify complex morphological or functional changes (e.g., lipid droplets, neurite outgrowth, organelle health) in genetically edited cells for phenotypic screening. |
| PK/PD Modeling Software (e.g., NONMEM, Phoenix, R/Python) | Function: Develop and simulate mathematical models of drug disposition and effect. Application: Integrate HGI-derived parameters (effect size, natural variation) to predict human dose-response and optimize clinical trial AUC targets. |
| Bioinformatics Pipelines (coloc, TwoSampleMR) | Function: Perform statistical genetics analyses central to HGI. Application: Execute colocalization and Mendelian Randomization analyses using publicly available GWAS and QTL summary statistics to establish causal inference. |

1. Introduction & Thesis Context

This whitepaper explores the evolution and application of the Area Under the Curve (AUC) metric, tracing its path from the evaluation of diagnostic tests via Receiver Operating Characteristic (ROC) curves to its pivotal role in scoring genetic evidence in Human Genetic Initiative (HGI) research. Within the broader thesis of HGI AUC calculation research, the core challenge is to quantify the aggregate evidence for gene-phenotype associations from massive-scale genome-wide association studies (GWAS) and sequencing data. This transition from a binary classifier metric to a continuous measure of genetic signal robustness is foundational for prioritizing drug targets.

2. Core AUC Concepts: Diagnostic ROC to Genetic Scoring

2.1 The ROC-AUC Foundation

ROC curves plot the true positive rate (sensitivity) against the false positive rate (1 - specificity) across all possible classification thresholds. The AUC summarizes classifier performance in a single scalar: an AUC of 1.0 denotes perfect discrimination and 0.5 denotes random performance. Equivalently, the AUC is the probability that a randomly chosen positive is scored higher than a randomly chosen negative.
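The ROC construction can be written out directly: sort by score, sweep the threshold from high to low, record (FPR, TPR) at each distinct score, and integrate with the trapezoidal rule. This is a minimal NumPy sketch with hypothetical helper names (libraries such as scikit-learn's `roc_curve`/`roc_auc_score` or R's pROC provide the same with more options). Tied scores are processed together, so they contribute a diagonal segment, which is equivalent to counting ties as one-half.

```python
import numpy as np


def roc_curve_points(scores, labels):
    """FPR and TPR at every distinct threshold, from the highest score down."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    order = np.argsort(-scores, kind="mergesort")
    s, y = scores[order], labels[order]
    # Last index of each group of tied scores
    last_of_group = np.r_[np.nonzero(np.diff(s))[0], s.size - 1]
    tp = np.cumsum(y)[last_of_group]
    fp = np.cumsum(~y)[last_of_group]
    tpr = np.r_[0.0, tp / y.sum()]
    fpr = np.r_[0.0, fp / (~y).sum()]
    return fpr, tpr


def trapezoid_auc(fpr, tpr):
    """Trapezoidal-rule area under the ROC curve."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))


fpr, tpr = roc_curve_points([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])
```

For these four scores the positives outrank the negatives in three of the four positive-negative pairs, and `trapezoid_auc(fpr, tpr)` returns 0.75.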

2.2 Translating AUC to Genetic Evidence

In HGI research, the "classifier" is often a statistical model or filtering pipeline separating true disease-associated variants from noise. Key adaptations include:

- Variant-Level AUC: Evaluating how well a functional score (e.g., CADD, PolyPhen) discriminates likely causal variants from benign variants.

- Gene-Level AUC: Assessing the performance of gene prioritization methods that aggregate variant signals, using known disease genes as positives.

Table 1: Evolution of AUC Interpretation Across Domains

| Domain | X-Axis | Y-Axis | AUC Interpretation | Typical Threshold for "Good" |
|---|---|---|---|---|
| Diagnostic Test | False Positive Rate | True Positive Rate | Ability to distinguish disease from healthy | >0.9 |
| Variant Prioritization | 1 - Specificity (Benign Variants) | Sensitivity (Pathogenic Variants) | Ability to identify causal genetic variants | >0.8 |
| Gene Prioritization | Fraction of Non-Disease Genes Ranked | Fraction of Known Disease Genes Ranked | Performance of gene aggregation methods | >0.7 |

3. Experimental Protocols for AUC in Genetic Studies

3.1 Protocol for Evaluating Variant Prioritization Scores

- Objective: Calculate AUC of a functional prediction score (e.g., MPC, MetaRNN).

- Positive Set: Curated pathogenic variants from ClinVar (restricted to loss-of-function or missense for relevant diseases).

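- Negative Set: Common variants (MAF > 1%) from gnomAD, presumed benign. Because pathogenic variants are mostly rare, scores that use allele frequency as a feature will show inflated AUCs against this negative set.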

- Method:

- Annotate all variants in positive and negative sets with the target prediction score.

- Treat the prediction score as a ranking classifier. Vary the score threshold.

- At each threshold, calculate Sensitivity (TPR) and 1-Specificity (FPR).

- Plot ROC curve and compute AUC using the trapezoidal rule.

- Validation: Use cross-validation across different disease cohorts to avoid bias.

3.2 Protocol for Gene-Based Burden Test AUC Evaluation

- Objective: Assess the performance of a rare-variant burden test in distinguishing case/control status.

- Data: WGS/WES data from HGI consortia (e.g., UK Biobank, FinnGen).

- Gene Set: Define a "gold-standard" set of positive control genes with established disease associations.

- Method:

- For each gene, perform a gene-based rare-variant test (e.g., a burden test or SKAT-O) across all samples, yielding a p-value.

- For a range of p-value thresholds, genes surpassing threshold are "predicted positives."

- Calculate the proportion of gold-standard genes recovered (Sensitivity) vs. the proportion of all other genes called (FPR).

- Plot ROC and calculate AUC. A higher AUC indicates the burden test effectively enriches for known genes.

4. Visualization of Key Concepts and Workflows

Diagram 1: HGI Gene Prioritization & AUC Validation Workflow

Diagram 2: Variant-Level Functional Score AUC Calculation

5. The Scientist's Toolkit: Key Research Reagents & Solutions

Table 2: Essential Toolkit for HGI AUC Research

| Item / Solution | Function in AUC-Focused Research | Example / Provider |
|---|---|---|
| Curated Variant Databases | Provide gold-standard positive/negative sets for AUC benchmark calculations. | ClinVar, gnomAD, HGMD |
| Functional Prediction Algorithms | Generate variant-level scores whose discriminatory power is evaluated via AUC. | CADD, REVEL, MPC, AlphaMissense |
| Gene-Aggregation Software | Perform burden tests and generate gene-level association statistics for evaluation. | SKAT-O (in R), REGENIE, MAGMA, Hail |
| AUC Calculation Packages | Efficiently compute ROC curves and AUC with confidence intervals. | pROC (R), scikit-learn (Python, roc_auc_score), statsmodels |
| High-Performance Computing (HPC) Cluster | Enables large-scale re-computation of scores and AUC benchmarks across thousands of genes/variants. | Cloud (AWS, GCP) or on-premise SLURM cluster |
| Containerization Software | Ensures reproducibility of complex analysis pipelines for AUC validation. | Docker, Singularity |

Key Biological and Statistical Rationale for Using HGI-AUC

1. Introduction and Context

Within the broader thesis of HGI (Human Genetic Interaction) research, the calculation of the Area Under the Curve (AUC) for HGI profiles emerges as a critical quantitative metric. This whitepaper details the core biological and statistical rationales for its adoption, positioning HGI-AUC as a superior integrator of genetic interaction data for functional genomics and drug target validation. HGI maps epistatic relationships, where the phenotypic effect of one genetic variant depends on the presence of another. The AUC summarization transforms complex, multi-condition genetic interaction profiles into a single, robust statistic, enabling comparative analysis and prioritization.

2. Biological Rationale: Capturing System Perturbation Robustness

The fundamental biological premise is that genes operating within the same functional pathway or complex often show similar patterns of genetic interactions across a spectrum of query gene perturbations. A full HGI profile, generated against a panel of diverse mutant backgrounds (e.g., in yeast) or in various cellular contexts (e.g., different cancer cell lines), reflects the global "genetic neighborhood" of a gene.

- Phenotypic Breadth Over Single Endpoints: Relying on a single interaction score from one condition is biologically myopic. HGI-AUC integrates interaction strength across multiple perturbations, capturing the consistent functional relationship between gene pairs, which is more reflective of true biological pathway membership.

- Buffering and Synthetic Lethality Integration: The AUC inherently weights both positive (buffering/suppressive) and negative (aggravating/synthetic sick-lethal) interactions across all tested conditions. A gene whose knockout consistently buffers many diverse perturbations may be a central hub in a stress-response network.

- Noise Mitigation: Biological replicates and experimental noise can cause variability in single-point measurements. The AUC calculation smooths out such stochastic noise, providing a more reliable aggregate measure of genetic interaction strength.

3. Statistical Rationale: A Robust Comparative Metric

Statistically, HGI-AUC offers advantages over alternative summary statistics.

- Non-Parametric Strength: It does not assume a normal distribution of interaction scores, which is often violated in genomic data.

- Rank-Based Comparisons: When calculated from ranked interaction profiles, the AUC equals the Mann-Whitney U statistic divided by the product of the two group sizes. This gives a probabilistic interpretation: the probability that a randomly chosen score from one set ranks above a randomly chosen score from the other, with ties counted as one-half.

- Dimensionality Reduction: It enables the reduction of a high-dimensional interaction profile (scores across N conditions) to a single, comparable scalar, facilitating large-scale analyses like clustering and genome-wide association.
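The Mann-Whitney connection can be checked numerically: U is obtained from the rank sum of the positive scores, U/(n_pos × n_neg) is the AUC, and this equals the pairwise probability that a positive outscores a negative with ties counted as one-half. The helper names are illustrative; `scipy.stats.rankdata` supplies average ranks for ties.

```python
import numpy as np
from scipy.stats import rankdata


def auc_mann_whitney(pos_scores, neg_scores):
    """AUC = U / (n_pos * n_neg), with U from the rank sum of the positives."""
    pos = np.asarray(pos_scores, float)
    neg = np.asarray(neg_scores, float)
    ranks = rankdata(np.concatenate([pos, neg]))  # average ranks for ties
    n1, n0 = pos.size, neg.size
    u = ranks[:n1].sum() - n1 * (n1 + 1) / 2.0
    return u / (n1 * n0)


def auc_pairwise(pos_scores, neg_scores):
    """P(pos > neg) + 0.5 * P(pos == neg), averaged over all pairs."""
    pos = np.asarray(pos_scores, float)[:, None]
    neg = np.asarray(neg_scores, float)[None, :]
    return float(np.mean((pos > neg) + 0.5 * (pos == neg)))
```

The rank-based form costs O(n log n), while the pairwise form costs O(n_pos × n_neg) memory and time, so the rank-based version is the practical choice for genome-scale profiles.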

4. Experimental Protocol for HGI-AUC Generation

A standard protocol for generating HGI-AUC data in a model organism (e.g., S. cerevisiae) is outlined below.

4.1. High-Throughput Genetic Interaction Mapping (SGA/E-MAP)

- Query Strain Array: Construct an array of query gene deletion mutants, each carrying a distinct molecular barcode and a common selectable marker (e.g., natMX).

- Library Crossing: Mate the query array with a comprehensive library of "array" deletion mutants (e.g., the kanMX-marked yeast deletion collection) using a robotic pinning system on solid agar media.

- Diploid Selection: Transfer mated cells to medium selecting for diploids.

- Sporulation and Haploid Selection: Induce sporulation, then pin to medium selecting for haploid progeny carrying both deletion markers (e.g., G418 and nourseothricin).

- Phenotypic Data Collection: Grow double mutants on solid agar. Quantify fitness via colony size imaging (e.g., Scan-o-Matic) or barcode sequencing (Bar-seq) over time.

- Interaction Score Calculation: For each double mutant ij, compute a genetic interaction score ε_ij, typically as the deviation of the observed double-mutant fitness w_ij from the expectation under the multiplicative model, w_i × w_j: ε_ij = w_ij - w_i × w_j. Scores are generated across the entire array for each query gene.
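Once single- and double-mutant fitness values are available, the multiplicative-model score is a single matrix operation. The sketch assumes fitness has already been normalized (e.g., colony size relative to a wild-type or plate reference), that rows index query genes and columns index array genes, and it omits the plate, row/column, and batch corrections that real SGA scoring pipelines apply. The ±0.08 cutoff in `classify` is an illustrative assumption, not a universal standard.

```python
import numpy as np


def interaction_scores(w_query, w_array, w_double):
    """epsilon_ij = w_ij - w_i * w_j under the multiplicative model."""
    w_query = np.asarray(w_query, float)    # single-mutant fitness of query genes (rows)
    w_array = np.asarray(w_array, float)    # single-mutant fitness of array genes (columns)
    w_double = np.asarray(w_double, float)  # observed double-mutant fitness matrix
    return w_double - np.outer(w_query, w_array)


def classify(eps, cutoff=0.08):
    """Label each score as negative (aggravating), positive (buffering), or neutral."""
    eps = np.asarray(eps, float)
    return np.where(eps < -cutoff, "negative",
                    np.where(eps > cutoff, "positive", "neutral"))


eps = interaction_scores([0.9, 0.5], [1.0, 0.8, 0.6],
                         [[0.9, 0.72, 0.2], [0.5, 0.5, 0.3]])
```

Here the first query gene shows a strong aggravating interaction with the third array gene (ε = 0.2 - 0.54 = -0.34), and the second query gene a buffering interaction with the second array gene (ε = 0.5 - 0.4 = 0.1).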

4.2. HGI Profile Assembly and AUC Calculation

- Profile Assembly: For a specific gene of interest (the "target"), compile its vector of interaction scores (ε) with all genes in the array library. This forms its HGI profile.

- Condition Aggregation (Optional): If profiles exist across multiple conditions (e.g., different drugs, temperatures), either concatenate the per-condition profiles or average the scores for each gene pair across conditions.

- AUC Calculation (vs. a Reference Set):

- Define a positive reference set (e.g., known pathway members) and a negative set (unrelated genes).

- Rank all genes in the target's HGI profile by their interaction strength (e.g., most negative to most positive ε).

- Calculate the AUC using the trapezoidal rule on the resulting ROC curve: walking down the ranked list, the x-axis is the cumulative fraction of negative reference genes and the y-axis is the cumulative fraction of positive reference genes encountered up to each rank. An AUC of 0.5 indicates random ordering; an AUC > 0.5 indicates enrichment of positive references among strong interactors.
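The profile AUC and a confidence interval can be sketched as follows. Genes are scored by -ε so the most negative interactions rank first, the AUC is computed as the probability that a positive reference gene outranks a negative one (ties counted as one-half), and a percentile bootstrap that resamples positives and negatives separately gives an interval. The inputs are assumed to contain only genes in the positive or negative reference sets; the bootstrap settings are illustrative, and DeLong's method (as used by pROC) is a common analytic alternative.

```python
import numpy as np


def profile_auc(eps, is_positive):
    """ROC AUC of positive vs. negative reference genes, ranking by most negative epsilon."""
    score = -np.asarray(eps, float)  # strongest negative interaction = highest score
    y = np.asarray(is_positive, bool)
    pos, neg = score[y][:, None], score[~y][None, :]
    return float(np.mean((pos > neg) + 0.5 * (pos == neg)))


def bootstrap_ci(eps, is_positive, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap CI, resampling positives and negatives separately."""
    rng = np.random.default_rng(seed)
    eps = np.asarray(eps, float)
    y = np.asarray(is_positive, bool)
    pos_idx, neg_idx = np.nonzero(y)[0], np.nonzero(~y)[0]
    stats = np.empty(n_boot)
    for b in range(n_boot):
        idx = np.r_[rng.choice(pos_idx, pos_idx.size), rng.choice(neg_idx, neg_idx.size)]
        stats[b] = profile_auc(eps[idx], y[idx])
    alpha = (1.0 - level) / 2.0
    lo, hi = np.quantile(stats, [alpha, 1.0 - alpha])
    return float(lo), float(hi)
```

Resampling within each class keeps the number of positives and negatives fixed in every replicate, so no replicate can end up without one of the two classes.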

5. Data Presentation

Table 1: Comparison of HGI Summary Metrics

| Metric | Description | Biological Interpretation | Statistical Properties | Sensitivity to Noise |
|---|---|---|---|---|
| HGI-AUC | Area under the receiver operating characteristic curve for a known gene set. | Global functional similarity to a reference pathway/complex. | Non-parametric, rank-based; confidence intervals available (e.g., bootstrap or DeLong). | Low (integrates across ranks). |
| Mean Interaction Score | Arithmetic average of all ε scores in the profile. | Average net interaction strength. | Sensitive to extreme outliers; assumes a symmetric distribution. | High. |
| Top-N Hit Count | Number of interactions beyond a significance threshold. | Number of strong, condition-specific interactions. | Depends heavily on arbitrary threshold selection. | Medium. |
| Profile Correlation (Pearson) | Linear correlation between two genes' full HGI profiles. | Linear relatedness of interaction patterns. | Assumes linearity and normality; sensitive to outliers. | Medium-High. |

Table 2: Exemplar HGI-AUC Values for Yeast Gene Functional Classes

| Gene (Standard Name) | Function/Complex | Reference Positive Set | Calculated HGI-AUC (vs. Neg. Set) | 95% Confidence Interval |
|---|---|---|---|---|
| CDC28 | Cyclin-dependent kinase | Cell cycle regulators | 0.89 | [0.85, 0.93] |
| SEC21 | COPI vesicle coat | ER/Golgi transport factors | 0.82 | [0.78, 0.86] |
| VMA2 | Vacuolar H+-ATPase | Vacuolar acidification | 0.91 | [0.88, 0.94] |
| YKU70 | Non-homologous end joining | DNA repair genes | 0.76 | [0.71, 0.81] |

6. Visualization of Core Concepts

HGI-AUC Generation Experimental Workflow

Biological and Statistical Rationale for HGI-AUC

7. The Scientist's Toolkit: Key Research Reagent Solutions

| Item | Function in HGI-AUC Research | Example/Supplier Note |
|---|---|---|
| Barcoded Yeast Deletion Libraries | Provides the comprehensive array of haploid deletion mutants (or homozygous/heterozygous diploid deletion strains) for crossing. Essential for scalability. | Yeast Knockout (YKO) collection (Thermo Fisher). Contains ~5000 strains with unique UPTAG/DNTAG barcodes. |
| Query Strain Collection | Arrayed set of mutants for genes of interest (e.g., drug targets, essential genes), used as the starting point for mapping interactions. | Often constructed in-house using PCR-based gene deletion. |
| Robotic Pinning Systems | Enables high-density, reproducible replication of strain arrays across agar plates for the sequential steps of SGA. | Singer Instruments ROTOR or S&P Robotics. |
| Colony Imaging & Analysis Software | Quantifies colony size (fitness proxy) from high-resolution scans of assay plates. | Scan-o-Matic (open-source) or gitter for image analysis. |
| Genetic Interaction Scoring Pipeline | Computes interaction scores (ε) from raw fitness data, correcting for plate and row/column effects. | CellProfiler, pySGA, or custom R/Python scripts. |
| HGI-AUC Calculation Package | Implements rank-ordering and AUC calculation against defined gene sets, with confidence interval estimation. | R packages pROC or AUC, or custom scripts using scikit-learn in Python. |
| Condition-Specific Perturbagens | Compounds, temperature shifts, or nutrient stresses applied during fitness assays to generate context-specific HGI profiles. | Libraries of FDA-approved drugs (e.g., Prestwick) for chemical genomics. |

Within Human Genetic Initiative (HGI) research on area under the curve (AUC) calculation for complex trait analysis, the integration of three core components—genetic variants, phenotype data, and prediction models—is fundamental. This technical guide details their synergistic role in constructing polygenic risk scores (PRS) and other predictive frameworks to quantify genetic liability and its phenotypic expression, ultimately aiming to improve translational outcomes in drug development.

Genetic Variants: The Foundational Layer

Genetic variants, primarily single nucleotide polymorphisms (SNPs), serve as the input variables for predictive models. In HGI AUC research, the focus is on genome-wide association study (GWAS)-derived variants associated with a trait of interest.

Key Experimental Protocol: GWAS Summary Statistics Generation

- Cohort Ascertainment: Assemble a large, phenotypically well-characterized case-control or quantitative trait cohort.

- Genotyping & Imputation: Perform high-density genotyping (e.g., using Illumina or Affymetrix arrays), followed by imputation to a reference panel (e.g., 1000 Genomes, HRC, TOPMed) to infer missing and untyped genotypes.

- Quality Control (QC): Apply stringent filters: per-SNP call rate >98%, minor allele frequency (MAF) >1%, Hardy-Weinberg equilibrium p > 1x10^-6; per-sample call rate >97%, heterozygosity outliers removed, genetic sex checks, relatedness filtering (remove one from each pair with PI_HAT > 0.2).

- Association Testing: For each variant, perform a logistic (for case-control) or linear (for quantitative) regression, adjusting for principal components (PCs) to account for population stratification.

- Summary Statistics Output: Generate a standardized file containing SNP ID (rsID), chromosome, position, effect/alternate allele, other allele, effect size (beta or odds ratio), standard error, p-value, and MAF.
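The per-SNP filters from the QC step can be sketched using the thresholds listed above. The sketch assumes genotypes coded as allele counts (0/1/2) with missing calls as NaN, and uses a 1-df chi-square Hardy-Weinberg test for brevity. Production tools such as PLINK use an exact HWE test, which is more accurate for low-frequency variants. Per-sample filters (call rate, heterozygosity, sex checks, relatedness) are not shown.

```python
import math
import numpy as np


def hwe_chisq_p(n_aa, n_ab, n_bb):
    """1-df chi-square Hardy-Weinberg test p-value from genotype counts."""
    n = n_aa + n_ab + n_bb
    p = (2 * n_aa + n_ab) / (2 * n)
    q = 1 - p
    expected = np.array([n * p * p, 2 * n * p * q, n * q * q])
    if np.any(expected == 0):
        return 1.0  # monomorphic SNP: no evidence against HWE
    observed = np.array([n_aa, n_ab, n_bb], float)
    chi2 = float(np.sum((observed - expected) ** 2 / expected))
    # Chi-square(1) survival function: P(X >= chi2) = erfc(sqrt(chi2 / 2))
    return math.erfc(math.sqrt(chi2 / 2.0))


def snp_passes_qc(genotypes, call_rate_min=0.98, maf_min=0.01, hwe_p_min=1e-6):
    """Apply call-rate, MAF, and HWE filters to one SNP across samples."""
    g = np.asarray(genotypes, float)
    called = g[~np.isnan(g)]
    if called.size == 0:
        return False
    call_rate = called.size / g.size
    freq = called.sum() / (2 * called.size)
    maf = min(freq, 1 - freq)
    counts = [int(np.sum(called == k)) for k in (0, 1, 2)]
    hwe_p = hwe_chisq_p(*counts)
    return call_rate > call_rate_min and maf > maf_min and hwe_p > hwe_p_min
```

In practice the HWE filter is usually applied in controls only, since true disease associations can cause departures from equilibrium in cases.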

Table 1: Representative QC Metrics from a GWAS for AUC Modeling

| Metric | Threshold | Typical Post-QC Yield |
|---|---|---|
| Sample Call Rate | > 97% | > 99% |
| SNP Call Rate | > 98% | > 99% |
| Minor Allele Frequency (MAF) | > 0.01 | 4-6 million SNPs |
| Hardy-Weinberg P-value | > 1x10^-6 | > 99.9% of SNPs pass |
| Genomic Inflation Factor (λ) | < 1.05 | ~1.02 (well-controlled) |
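The genomic inflation factor λ is conventionally computed as the median observed association chi-square statistic divided by its expected median under the null (about 0.4549 for 1 degree of freedom). A minimal sketch, assuming per-variant z-scores (beta/SE) are available; with p-values instead, the chi-square statistics could be recovered with `scipy.stats.chi2.isf(p, 1)`.

```python
import numpy as np

MEDIAN_CHI2_1DF = 0.4549364  # median of a chi-square distribution with 1 df


def genomic_inflation(z_scores):
    """lambda_GC = median(z^2) / median of chi-square(1) under the null."""
    z = np.asarray(z_scores, float)
    return float(np.median(z**2) / MEDIAN_CHI2_1DF)


rng = np.random.default_rng(1)
null_z = rng.standard_normal(200_000)
lam = genomic_inflation(null_z)  # close to 1.0 for well-calibrated null statistics
```

Because λ is computed on the chi-square scale, inflating every z-score by 10% raises λ by about 21%.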

Phenotype Data: Defining the Outcome

Accurate, precise, and consistently measured phenotype data is critical for both training the model and evaluating its predictive performance via AUC.

Key Experimental Protocol: Phenotype Standardization for HGI Studies

- Phenotype Definition: Operationally define the trait using clinical guidelines (e.g., ICD codes), continuous biomarkers (e.g., HbA1c), or standardized questionnaires. Strata (e.g., severe vs. moderate) may be defined.

- Data Harmonization: Across consortium sites, implement standardized data collection protocols (SOPs) and common data models (e.g., OMOP CDM) to minimize heterogeneity.

- Covariate Adjustment: Systematically collect covariates (age, sex, genetic PCs, relevant clinical confounders) for inclusion in the GWAS model to generate "clean" association signals.

- Trait Transformation: For quantitative traits, apply appropriate transformations (e.g., inverse normal rank) to ensure residuals approximate a normal distribution for linear regression.
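A rank-based inverse normal transformation can be sketched as below. The Blom offset c = 3/8 is one common choice (others use c = 0.5); giving tied values their average rank and passing missing values through unchanged are assumptions to align with the consortium's analysis plan.

```python
import numpy as np
from scipy.stats import norm, rankdata


def inverse_normal_transform(x, c=3.0 / 8.0):
    """Rank-based INT: Phi^-1((r - c) / (n - 2c + 1)); NaNs are left as NaN."""
    x = np.asarray(x, float)
    out = np.full(x.shape, np.nan)
    ok = ~np.isnan(x)
    r = rankdata(x[ok])  # average ranks for ties
    n = r.size
    out[ok] = norm.ppf((r - c) / (n - 2.0 * c + 1.0))
    return out


y = inverse_normal_transform([5.0, 1.0, 3.0, np.nan, 2.0])
```

The transform preserves the ordering of observed values and maps them onto symmetric normal quantiles, so even a heavily skewed biomarker yields residuals much closer to normal.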

The Prediction Model: Integration and Calculation

The prediction model, most commonly a Polygenic Risk Score (PRS), integrates the first two components to estimate an individual's genetic propensity.

Key Experimental Protocol: PRS Construction and AUC Evaluation

- Base/Target Data Split: Use independent cohorts for discovery (base GWAS) and prediction (target sample).

- Clumping and Thresholding (C+T):

- Clumping: Using LD estimated in the target sample or a reference panel, identify LD-independent SNPs: retain the most significant SNP in each region and remove SNPs within a 250 kb window that are in LD with it (r^2 threshold typically 0.1).

- P-value Thresholding: Select SNPs from the base GWAS that meet a series of significance thresholds (e.g., p < 5x10^-8, 1x10^-5, 0.001, 0.1, 0.5, 1).

- Score Calculation: In the target sample, calculate for each individual j: PRS_j = Σ_i β_i × G_ij, where β_i is the effect size for SNP i from the base GWAS and G_ij is the count (0, 1, 2) of the effect allele for SNP i in individual j.

- AUC Evaluation: Fit a logistic regression model with the actual phenotype as the outcome and the PRS (plus necessary covariates like PCs) as the predictor. Use the predicted probabilities to generate a Receiver Operating Characteristic (ROC) curve. The Area Under this Curve (AUC) quantifies the model's discriminative accuracy.
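The C+T steps and the AUC evaluation can be sketched end to end. This is a simplified illustration that assumes a single chromosome (positions are compared directly), a precomputed SNP-by-SNP r² matrix, and effect-allele dosages already aligned to the base GWAS; tools such as PRSice-2 or PLINK (`--clump`, `--score`) also handle allele alignment, multiple chromosomes, and missing genotypes. The AUC is computed on the PRS itself: for a logistic model containing only the PRS with a positive coefficient, fitted probabilities are a monotone transform of the score, so the AUC is identical. Once covariates such as PCs are added, the full model must be fitted first.

```python
import numpy as np


def clump(pvals, positions, r2, window_bp=250_000, r2_max=0.1):
    """Greedy LD clumping: keep the most significant remaining SNP, drop SNPs
    within window_bp that have r^2 > r2_max with it, and repeat."""
    remaining = set(range(len(pvals)))
    kept = []
    for i in map(int, np.argsort(pvals)):
        if i not in remaining:
            continue
        kept.append(i)
        remaining.discard(i)
        for j in list(remaining):
            if abs(positions[j] - positions[i]) <= window_bp and r2[i, j] > r2_max:
                remaining.discard(j)
    return kept


def prs(genotypes, betas, pvals, index_snps, p_threshold):
    """PRS_j = sum_i beta_i * G_ij over clumped SNPs with p < p_threshold.
    genotypes: individuals x SNPs matrix of effect-allele counts (0/1/2)."""
    sel = [i for i in index_snps if pvals[i] < p_threshold]
    return genotypes[:, sel] @ betas[sel]


def auc(score, case):
    """AUC as P(score_case > score_control), ties counted as 1/2."""
    case = np.asarray(case, bool)
    pos, neg = score[case][:, None], score[~case][None, :]
    return float(np.mean((pos > neg) + 0.5 * (pos == neg)))
```

Repeating `prs` and `auc` over the series of p-value thresholds and keeping the best threshold is the usual C+T tuning step; that choice should be validated in a sample separate from the one used to report the final AUC.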

Table 2: Comparative AUC Performance of PRS Across Selected Complex Traits

| Trait | Base GWAS Sample Size | Number of SNPs in PRS | AUC in Independent Cohort |
|---|---|---|---|
| Coronary Artery Disease | ~1 Million | ~1.5 Million | 0.75 - 0.80 |
| Type 2 Diabetes | ~900,000 | ~1.2 Million | 0.70 - 0.75 |
| Major Depressive Disorder | ~500,000 | ~800,000 | 0.58 - 0.62 |
| Breast Cancer | ~300,000 | ~10,000 (GWAS Sig.) | 0.65 - 0.70 |

Diagram: Polygenic Risk Score Calculation and AUC Evaluation Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for HGI AUC Research

| Item | Function in HGI/PRS Research |
|---|---|
| Genotyping Array (e.g., Illumina Global Screening Array) | High-throughput, cost-effective genome-wide SNP genotyping for large cohorts. |
| Imputation Server/Software (e.g., Michigan Imputation Server, Minimac4) | Infers ungenotyped variants using large reference haplotypes, increasing variant density. |
| GWAS QC & Analysis Pipeline (e.g., PLINK, SAIGE, REGENIE) | Performs quality control, population stratification correction, and association testing. |
| LD Reference Panel (e.g., 1000 Genomes, UK Biobank haplotypes) | Provides population-specific linkage disequilibrium structure for clumping and imputation. |
| PRS Construction Software (e.g., PRSice-2, plink --score, LDpred2) | Implements C+T, Bayesian, or machine learning methods for optimal PRS calculation. |
| AUC Calculation Library (e.g., pROC in R, sklearn.metrics in Python) | Computes the ROC curve and AUC with confidence intervals for performance evaluation. |

Diagram: Core Component Integration in HGI Research

Advanced Modeling Considerations

Moving beyond C+T, modern HGI AUC research employs sophisticated methods:

- LDpred2 / PRS-CS: Bayesian methods that adjust SNP weights for linkage disequilibrium, improving AUC, especially for highly polygenic traits.

- MTAG / GWAS Meta-analysis: MTAG jointly analyzes GWAS summary statistics for genetically correlated traits, while meta-analysis combines GWAS of the same trait across cohorts; both can boost discovery and improve predictive power.

- Cross-Population AUC Analysis: Highlights the critical need for diverse genetic datasets, as PRS trained in one population often shows reduced AUC in others due to differing LD and allele frequencies.

The iterative refinement of the triad—genetic variants, phenotype data, and prediction models—directly drives improvements in the AUC metric, a key benchmark in HGI research. For drug development professionals, understanding these components informs target validation, patient stratification, and clinical trial design, bridging genetic discovery and therapeutic application.

Distinguishing HGI-AUC from Other Genetic Metrics (e.g., P-value, Odds Ratio)

Within the expanding field of statistical genetics and genomic prediction, the evaluation of polygenic scores (PGS) for complex traits demands metrics that capture predictive performance across the entire allele frequency and effect size spectrum. The HGI-AUC (Heritability-Governed Integration Area Under the Curve) has emerged as a specialized metric within recent research on HGI AUC calculation. Unlike traditional association metrics like P-value and Odds Ratio, HGI-AUC is designed to quantify the aggregate discriminative accuracy of a PGS, specifically by integrating trait heritability constraints to prevent overestimation from winner’s curse. This whitepaper provides a technical guide to distinguish HGI-AUC from foundational genetic association metrics, detailing its calculation, application, and complementary role in therapeutic target identification.

Foundational Genetic Metrics: P-value and Odds Ratio

P-value

The P-value measures the probability of observing the obtained data (or more extreme data) if the null hypothesis (no association between genetic variant and trait) is true. It is a measure of statistical significance, not effect size or predictive power.

Odds Ratio (OR)

The Odds Ratio quantifies the strength and direction of association between an allele and a binary outcome (e.g., disease case vs. control). It represents the odds of disease given the risk allele relative to the odds given the non-risk allele.
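For a single variant, the allelic odds ratio, its confidence interval, and a p-value follow directly from a 2 × 2 table of allele counts in cases and controls. The sketch uses the Woolf (log-scale) interval and a Wald test on log(OR). `allelic_odds_ratio` is a hypothetical helper, and a table with a zero cell would need a continuity correction (e.g., adding 0.5 to each cell) before use.

```python
import math


def allelic_odds_ratio(case_risk, case_other, ctrl_risk, ctrl_other, z=1.959964):
    """Allelic OR from allele counts, with a Woolf 95% CI and a two-sided Wald p-value."""
    or_ = (case_risk * ctrl_other) / (case_other * ctrl_risk)
    se_log = math.sqrt(1 / case_risk + 1 / case_other + 1 / ctrl_risk + 1 / ctrl_other)
    lo = math.exp(math.log(or_) - z * se_log)
    hi = math.exp(math.log(or_) + z * se_log)
    # Wald test of log(OR) = 0 under a standard normal null
    p = math.erfc(abs(math.log(or_) / se_log) / math.sqrt(2.0))
    return or_, (lo, hi), p
```

For 300 risk vs. 700 other alleles in cases and 200 vs. 800 in controls, the OR is 300 × 800 / (700 × 200) ≈ 1.71, illustrating that the p-value and the OR answer different questions: the same OR becomes more or less significant as the counts grow or shrink.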

Table 1: Comparison of Core Single-Variant Association Metrics

| Metric | Purpose | Scale | Interpretation | Key Limitation |
|---|---|---|---|---|
| P-value | Statistical significance testing. | 0 to 1. | Probability under null. Lower = more significant. | Does not convey effect size or biological importance. |
| Odds Ratio (OR) | Effect size for binary traits. | 0 to ∞. 1 = no effect. >1 = risk, <1 = protective. | Strength of association per allele. | Susceptible to ascertainment bias; limited to binary traits. |
| HGI-AUC | Predictive performance of a polygenic score. | 0.5 (random) to 1.0 (perfect). | Integrated discriminative accuracy across spectrum. | Requires large, well-phenotyped cohorts and heritability estimates. |

HGI-AUC: A Polygenic Performance Metric

HGI-AUC is not a single-variant statistic. It is a composite metric that evaluates the predictive performance of a multi-variant model—typically a polygenic score—by calculating the Area Under the Receiver Operating Characteristic (ROC) Curve, with critical adjustments governed by the trait's heritability architecture.

Core Conceptual Framework

The HGI framework posits that the predictive capacity of a PGS is bounded by the trait's heritability (h²). The standard AUC from a PGS can be inflated in discovery samples due to overfitting. HGI-AUC integrates a heritability-aware shrinkage, often using linkage disequilibrium (LD) information and heritability estimates (e.g., from LD Score regression) to calibrate effect sizes before AUC calculation, providing a more realistic out-of-sample performance estimate.
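A minimal way to see how h²_snp governs shrinkage is the infinitesimal model with LD ignored. This is equivalent to LDpred-inf with an identity LD matrix. Under it, each standardized marginal effect is multiplied by 1 / (1 + M / (N·h²_snp)), where M is the number of SNPs and N the GWAS sample size. This is a simplified illustration, not the HGI-AUC procedure itself: LDpred2 and PRS-CS also model LD and non-infinitesimal architectures. The function and parameter names are hypothetical.

```python
def infinitesimal_shrinkage(betas, n_gwas, h2_snp, n_snps=None):
    """Posterior-mean shrinkage of standardized marginal effects under an
    infinitesimal prior with LD ignored (LDpred-inf with an identity LD matrix).

    Each effect is multiplied by 1 / (1 + M / (N * h2_snp)).
    """
    if not 0.0 < h2_snp <= 1.0:
        raise ValueError("h2_snp must lie in (0, 1]")
    m = len(betas) if n_snps is None else n_snps
    factor = 1.0 / (1.0 + m / (n_gwas * h2_snp))
    return [b * factor for b in betas], factor


# Hypothetical setting: 1M SNPs, GWAS N = 100,000, h2_snp = 0.25
shrunk, factor = infinitesimal_shrinkage([0.02, -0.01], 100_000, 0.25, n_snps=1_000_000)
print(factor)  # equals 1/41
```

Here M/(N·h²_snp) = 40, so every effect is shrunk to 1/41 of its estimate. A tenfold larger GWAS gives a factor of 1/5, which means the shrinkage relaxes as the discovery sample grows.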

Experimental Protocol for HGI-AUC Calculation

A standard workflow for computing HGI-AUC in a research setting is detailed below.

Protocol: Computing HGI-AUC for a Complex Disease Trait

Input Data Preparation:

- GWAS Summary Statistics: Obtain effect sizes (beta/OR), standard errors, and P-values for variants from a large-scale discovery GWAS.

- LD Reference Matrix: Acquire a population-matched LD matrix (e.g., from 1000 Genomes Project).

- Heritability Estimate: Calculate or obtain a reliable SNP-based heritability (h²_snp) estimate for the trait using tools like LDSC or GCTA.

- Target Genotype & Phenotype Data: Prepare an independent cohort with individual-level genotype data and corresponding phenotype (case/control or quantitative).

Polygenic Score Construction with HGI Calibration:

- Clumping & Thresholding: When building a simple C+T score, clump variants (e.g., r² < 0.1 within a 250 kb window) to select independent SNPs. Bayesian shrinkage methods such as PRS-CS and LDpred2 instead use all SNPs together with the LD reference.

- Effect Size Shrinkage: Apply a heritability-constrained shrinkage method to adjust SNP weights and minimize overfitting. A common choice is a Bayesian, LD-aware method. Examples are LDpred2, whose infinitesimal and grid models take h²_snp as an input, and PRS-CS.

- Calculate PGS: For each individual in the target cohort, compute the PGS as the sum of allele counts weighted by the shrunken effect sizes.

AUC Calculation & HGI Integration:

- Model Fitting: Regress the phenotype against the PGS (with covariates like principal components) using logistic regression (for binary traits).

- ROC Generation: Generate the ROC curve by plotting the True Positive Rate against the False Positive Rate as the classification threshold on the fitted probability (or on the PGS itself) varies.

- AUC Integration: Calculate the area under the ROC curve using the trapezoidal rule. This is the HGI-AUC—the AUC derived from the heritability-calibrated PGS.

Validation: Perform the calculation in multiple independent target cohorts or via cross-validation and report the mean and standard deviation of the HGI-AUC.
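The AUC in the final steps equals the probability that a randomly chosen case has a higher score than a randomly chosen control (the Mann–Whitney formulation). This matches the trapezoidal area under the empirical ROC curve. The sketch below uses that identity and then summarizes AUC across cohorts or folds, as the validation step describes.

It assumes the scores are already computed. When covariates are modeled, pass the fitted probabilities, because AUC depends only on ranks. The function names are hypothetical, and the pairwise loop is O(cases × controls), so production analyses would use pROC or scikit-learn.

```python
import statistics


def auc_mann_whitney(scores, labels):
    """P(score of a random case > score of a random control); ties count 1/2."""
    cases = [s for s, y in zip(scores, labels) if y == 1]
    controls = [s for s, y in zip(scores, labels) if y == 0]
    if not cases or not controls:
        raise ValueError("need at least one case and one control")
    wins = 0.0
    for c in cases:
        for k in controls:
            wins += 1.0 if c > k else 0.5 if c == k else 0.0
    return wins / (len(cases) * len(controls))


def summarize_auc(cohorts):
    """Mean and SD of AUC over (scores, labels) pairs from independent
    validation cohorts or cross-validation folds."""
    aucs = [auc_mann_whitney(s, y) for s, y in cohorts]
    sd = statistics.stdev(aucs) if len(aucs) > 1 else 0.0
    return statistics.mean(aucs), sd


# Hypothetical toy cohorts: calibrated PGS values and case/control labels
cohorts = [
    ([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]),
    ([0.1, 0.2, 0.9, 0.8], [0, 0, 1, 1]),
]
print(summarize_auc(cohorts))
```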

Diagram 1: HGI-AUC Calculation Workflow

The Scientist's Toolkit: Key Research Reagents & Materials

Table 2: Essential Research Reagent Solutions for HGI-AUC Experiments

| Item | Function / Description | Example Source / Tool |
|---|---|---|
| GWAS Summary Statistics | Base data containing variant-trait associations. | Public repositories: GWAS Catalog, PGS Catalog, or consortium databases. |
| LD Reference Panel | Provides linkage disequilibrium structure for calibration. | 1000 Genomes Project, UK Biobank, or population-specific panels. |
| Genotyping Array / Imputation Software | To obtain variant data for the target cohort. | Illumina Global Screening Array, Affymetrix; Minimac4, IMPUTE5. |
| Heritability Estimation Software | Calculates SNP-based heritability prior. | LD Score Regression (LDSC), GCTA-GREML. |
| PGS Shrinkage/Calibration Software | Applies heritability constraints to effect sizes. | PRS-CS, LDpred2, SBayesR. |
| Statistical Computing Environment | Platform for data processing, modeling, and AUC calculation. | R (pROC, PRSice2), Python (scikit-learn, numpy). |
| High-Performance Computing (HPC) Cluster | Handles computationally intensive steps (LD pruning, large-scale regression). | Institutional HPC or cloud computing (AWS, Google Cloud). |

Comparative Analysis via a Hypothetical Study

Consider a GWAS for Coronary Artery Disease (CAD) with 10 million SNPs.

- Top SNP: rs12345 has P = 3.2e-08 (genome-wide significant) and OR = 1.18 (modest risk effect).

- PGS Performance: A PGS built from 80,000 SNPs using unadjusted GWAS effect sizes yields an apparent AUC = 0.71 in the discovery sample.

- HGI-AUC Performance: The same PGS, after heritability-guided shrinkage using an h²_snp of 0.25, yields HGI-AUC = 0.65 in an independent validation cohort.

Table 3: Metric Outputs in a Hypothetical CAD Study

| Analysis Level | Specific Metric | Value | Interpretation in Context |
|---|---|---|---|
| Single-Variant | P-value for rs12345 | 3.2e-08 | Genome-wide significant hit. |
| Single-Variant | Odds Ratio for rs12345 | 1.18 | Each copy increases odds of CAD by 18%. |
| Polygenic (Naïve) | Apparent AUC (Discovery) | 0.71 | Overly optimistic due to overfitting. |
| Polygenic (Robust) | HGI-AUC (Validation) | 0.65 | Realistic clinical discriminative accuracy. |

Diagram 2: Logical Relationship Between Genetic Metrics

P-values and Odds Ratios are fundamental for identifying and characterizing individual genetic associations. In contrast, HGI-AUC operates at a higher level of integration, serving as a critical validation metric for the clinical and predictive utility of polygenic models. By explicitly incorporating trait heritability, HGI-AUC provides a conservative, realistic estimate of discriminative accuracy, which is indispensable for evaluating the potential of PGS in stratified medicine and drug development pipelines. Within the thesis of HGI-AUC calculation research, it represents the essential bridge between statistical association and actionable genetic prediction.

Step-by-Step: Calculating HGI-AUC for Target Prioritization and Validation

Genome-wide association studies (GWAS) in the Human Genetic Informatics (HGI) domain, particularly for complex quantitative traits like area under the curve (AUC) measurements from pharmacological or metabolic challenges, demand stringent data preprocessing. The accuracy of downstream genetic association estimates for AUC phenotypes hinges on the quality and structure of three core data matrices: genotype, phenotype, and covariates. This guide details the technical preparation of these matrices, framing it as a foundational step for robust HGI analysis of dose-response dynamics.

Core Data Matrices: Definitions and Specifications

The following table summarizes the essential characteristics and preparation goals for each core matrix.

Table 1: Specification of Core Data Matrices for HGI AUC Analysis

| Matrix | Primary Content | Format (Typical) | Key Preparation Goals | Relevance to AUC Phenotype |
|---|---|---|---|---|
| Genotype | Biallelic SNP dosages (0,1,2) or probabilities. Subjects x Variants. | PLINK (.bed/.bim/.fam), VCF, or numeric matrix. | Quality control (QC), imputation, alignment to reference genome, variant annotation. | Provides genetic independent variables for association testing. |
| Phenotype | Primary trait(s) of interest; here, the computed AUC values. Subjects x Phenotypes. | Tab-delimited or CSV file with subject IDs. | Accurate AUC calculation, normalization, outlier handling, ensuring matched subject IDs. | The primary dependent quantitative variable for genetic association. |
| Covariate | Variables to control for confounding (e.g., age, sex, principal components). Subjects x Covariates. | Tab-delimited or CSV file with subject IDs. | Collection of relevant confounders, encoding (e.g., categorical), scaling if needed. | Reduces false positives by accounting for non-genetic variance in AUC. |

Detailed Methodologies for Matrix Preparation

Genotype Matrix Preparation Protocol

Objective: To generate a clean, imputed, and analysis-ready genotype matrix.

- Initial Quality Control (QC): Use tools like PLINK or R/Bioconductor packages.

- Sample-level QC: Remove subjects with call rate < 98%, excessive heterozygosity, or sex discrepancies.

- Variant-level QC: Exclude SNPs with call rate < 95%, minor allele frequency (MAF) < 1%, and significant deviation from Hardy-Weinberg Equilibrium (HWE p < 1e-6).

- Genotype Imputation: Leverage reference panels (e.g., TOPMed, 1000 Genomes).

- Prephasing: Use Eagle or SHAPEIT to estimate haplotype phases.

- Imputation: Submit phased data to a server like Michigan Imputation Server or use tools like Minimac4/Beagle5. Filter output for imputation quality (R² > 0.3).

- Post-Imputation QC: Filter imputed variants for MAF > 1% and imputation quality R² > 0.8. Convert dosages to best-guess genotypes if needed.

- Annotation: Use ANNOVAR or snpEff to annotate variant consequences (e.g., missense, intergenic).
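The variant-level filters above can be expressed as a small per-SNP function. This sketch rests on two assumptions. First, genotypes are hard calls coded 0/1/2, with None for missing. Second, the HWE test is the 1-df chi-square goodness-of-fit test rather than the exact test PLINK uses by default, so p-values will differ for rare variants. The function name is hypothetical; in practice these filters are applied with PLINK's --geno, --maf and --hwe options.

```python
import math


def variant_qc(genotypes, min_call_rate=0.95, min_maf=0.01, hwe_p=1e-6):
    """Return (passes, call_rate, maf, hwe_pvalue) for one biallelic SNP.

    genotypes: allele counts 0/1/2 per subject, or None if missing.
    HWE: chi-square goodness-of-fit with 1 df (simplified; not the exact test).
    """
    called = [g for g in genotypes if g is not None]
    call_rate = len(called) / len(genotypes)
    n = len(called)
    if n == 0:
        return False, call_rate, 0.0, 1.0
    observed = [called.count(0), called.count(1), called.count(2)]
    p = (2 * observed[2] + observed[1]) / (2 * n)  # frequency of the counted allele
    maf = min(p, 1 - p)
    if maf == 0:
        return False, call_rate, maf, 1.0  # monomorphic: fails the MAF filter
    expected = [n * (1 - p) ** 2, 2 * n * p * (1 - p), n * p ** 2]
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    hwe = math.erfc(math.sqrt(chi2 / 2.0))  # upper tail of chi-square with 1 df
    passes = call_rate >= min_call_rate and maf >= min_maf and hwe >= hwe_p
    return passes, call_rate, maf, hwe
```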

Phenotype Matrix Preparation (AUC Focus)

Objective: To compute and prepare a normalized AUC phenotype matrix.

- Raw Data Acquisition: Obtain longitudinal measurements (e.g., blood concentration, glucose level) at multiple time points post-intervention.

- AUC Calculation: Employ the trapezoidal rule.

- For time points (t₁, t₂, ..., tₙ) and measurements (y₁, y₂, ..., yₙ):

$$\text{AUC} = \sum_{i=1}^{n-1} \frac{1}{2}\,(y_i + y_{i+1})\,(t_{i+1} - t_i)$$

- Consider standardizing for time interval if cohorts differ.

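- Phenotype Transformation: Assess normality (Shapiro-Wilk test). Apply an inverse normal rank transformation (INT) to the AUC values across the sample to mitigate outlier effects:

$$\text{AUC}_{\text{transformed}} = \Phi^{-1}\!\left(\frac{\operatorname{rank}(\text{AUC}) - 0.5}{N}\right)$$

where Φ⁻¹ is the standard normal quantile function and N is the sample size.

- Outlier Handling: Winsorize extreme values (>4 SD from the mean) if the untransformed AUC is analyzed. Because INT depends only on ranks, winsorizing before INT changes the result only by introducing ties at the cap.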
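The trapezoidal AUC and the inverse normal rank transformation can be sketched with the Python standard library, where statistics.NormalDist supplies Φ⁻¹. Tied AUC values receive their average rank; the text does not specify a tie rule, so this is an assumption. The function names and example curves are hypothetical.

```python
from statistics import NormalDist


def trapezoid_auc(times, values):
    """AUC = sum over intervals of 0.5 * (y_i + y_{i+1}) * (t_{i+1} - t_i)."""
    if len(times) != len(values) or len(times) < 2:
        raise ValueError("need at least two paired time points")
    if any(t2 <= t1 for t1, t2 in zip(times, times[1:])):
        raise ValueError("time points must be strictly increasing")
    return sum(
        0.5 * (values[i] + values[i + 1]) * (times[i + 1] - times[i])
        for i in range(len(times) - 1)
    )


def average_ranks(x):
    """1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(x)), key=lambda i: x[i])
    ranks = [0.0] * len(x)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and x[order[end + 1]] == x[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def inverse_normal_transform(x):
    """Phi^-1((rank - 0.5) / N) applied across the sample."""
    n = len(x)
    phi_inv = NormalDist().inv_cdf
    return [phi_inv((r - 0.5) / n) for r in average_ranks(x)]


# Hypothetical glucose curves (time in minutes, mg/dL) for three subjects
times = [0, 30, 60, 120]
curves = [[90, 160, 140, 100], [85, 120, 110, 95], [95, 190, 170, 130]]
aucs = [trapezoid_auc(times, c) for c in curves]
print(inverse_normal_transform(aucs))
```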

Covariate Matrix Preparation Protocol

Objective: To assemble a matrix that captures major sources of non-genetic variance.

- Data Collection: Compile demographic (age, sex, study center), technical (batch, array type), and biological (relevant clinical indices) data.

- Population Stratification Control: Calculate genetic principal components (PCs) from high-quality, LD-pruned genotype data.

- Use PLINK's `--pca` command on a subset of independent, common variants.

- Include the top 10 PCs as standard covariates in the matrix.

- Encoding: Represent categorical variables (e.g., sex, batch) as dummy or effect-coded variables. Center and scale continuous covariates (e.g., age) to mean=0, SD=1.

- Integration: Merge all covariates into a single matrix with rows perfectly aligned to the genotype and phenotype matrices by subject ID.
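The encoding step might look like the following sketch, which assumes each subject's covariates arrive as a dictionary. Categorical variables use dummy (treatment) coding, with the alphabetically first level as the reference. Continuous variables are z-scored with the sample SD. Both choices, and the function name, are illustrative assumptions.

```python
import statistics


def encode_covariates(rows, categorical, continuous):
    """Build covariate columns from per-subject dicts.

    Continuous covariates are centered and scaled to mean 0, SD 1.
    Categorical covariates get 0/1 dummy columns; the first level in
    sorted order is the reference and gets no column.
    """
    columns = {}
    for name in continuous:
        values = [float(r[name]) for r in rows]
        mean, sd = statistics.mean(values), statistics.stdev(values)
        if sd == 0:
            raise ValueError(f"covariate {name!r} is constant")
        columns[name] = [(v - mean) / sd for v in values]
    for name in categorical:
        levels = sorted({r[name] for r in rows})
        for level in levels[1:]:
            columns[f"{name}_{level}"] = [1.0 if r[name] == level else 0.0 for r in rows]
    return columns


# Hypothetical subjects (rows must already be in the final subject order)
rows = [
    {"age": 40, "sex": "F", "batch": "b1"},
    {"age": 50, "sex": "M", "batch": "b2"},
    {"age": 60, "sex": "F", "batch": "b3"},
]
cov = encode_covariates(rows, categorical=["sex", "batch"], continuous=["age"])
```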

Sample Overlap and Final Merging Protocol

- ID Matching: Ensure a consistent subject identifier across all three matrices. Use tools like R or Python to perform an inner join, retaining only subjects present in all three files.

- Order Synchronization: Sort all three matrices in identical subject order.

- Final Check: Verify dimensions. If N is the final sample size, M is the variant count, and C is the covariate count, then:

- Genotype: N x M

- Phenotype: N x 1 (for a single AUC trait)

- Covariate: N x C
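A minimal sketch of the merging protocol follows, assuming each matrix is held as a dictionary keyed by subject ID. Sorting the shared IDs gives all three outputs the same row order, and the final checks enforce the N x M, N x 1 and N x C shapes. The function name and the in-memory representation are hypothetical. At biobank scale, the same join is usually done with pandas or PLINK's --keep.

```python
def align_matrices(genotype, phenotype, covariate):
    """Inner-join three {subject_id: row} mappings and return them in one shared order.

    genotype: id -> list of M dosages; phenotype: id -> AUC value;
    covariate: id -> list of C covariate values.
    """
    shared = sorted(set(genotype) & set(phenotype) & set(covariate))
    if not shared:
        raise ValueError("no subjects present in all three matrices")
    geno = [genotype[s] for s in shared]
    pheno = [phenotype[s] for s in shared]
    covs = [covariate[s] for s in shared]
    n, m, c = len(shared), len(geno[0]), len(covs[0])
    # Final check: N x M genotype, N x 1 phenotype, N x C covariates
    if any(len(row) != m for row in geno):
        raise ValueError("genotype rows have different variant counts")
    if any(len(row) != c for row in covs):
        raise ValueError("covariate rows have different lengths")
    return shared, geno, pheno, covs, (n, m, c)


# Hypothetical inputs: s1 lacks a phenotype and s4 lacks genotypes
ids, G, y, C, dims = align_matrices(
    {"s1": [0, 1], "s2": [2, 0], "s3": [1, 1]},
    {"s2": 1.2, "s3": 0.4, "s4": 0.9},
    {"s1": [0.1, 1.0], "s2": [-0.5, 0.0], "s3": [0.4, 1.0]},
)
```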

Visualizing the Data Preparation Workflow

Title: Workflow for Genotype, Phenotype, and Covariate Matrix Prep

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools and Reagents for Data Preparation

| Item | Category | Primary Function in Preparation |
|---|---|---|
| PLINK 2.0 | Software Tool | Core toolkit for genotype QC, filtering, format conversion, and basic association testing. |
| Michigan Imputation Server | Web Service | High-accuracy genotype imputation service using TOPMed/1000G reference panels. |
| R/Bioconductor (qqman, SNPRelate) | Software Environment | Statistical computing for phenotype transformation, covariate management, and advanced genetic analyses. |
| Eagle/SHAPEIT | Software Tool | Performs haplotype phasing, a critical step prior to imputation for accuracy. |
| Trapezoidal Rule Script | Custom Code | Calculate AUC from longitudinal measurements; often implemented in R or Python. |
| ANNOVAR/snpEff | Software Tool | Functional annotation of genetic variants post-QC and imputation. |
| High-Performance Computing (HPC) Cluster | Infrastructure | Provides necessary computational power for genotype imputation and large-scale matrix operations. |
| Structured Clinical Database | Data Resource | Source for accurate demographic and clinical covariates, integral to the covariate matrix. |

The development of Polygenic Risk Scores (PRS) represents a cornerstone of statistical genetics, enabling the quantification of an individual's genetic liability for complex traits and diseases. Within the broader thesis on the Human Genetic Informatics (HGI) area under the curve (AUC) calculation research, this guide details the technical construction of PRS models. The primary objective is to maximize predictive accuracy, quantified by metrics like the AUC, which measures the model's ability to discriminate between cases and controls. Advancements beyond traditional PRS, including functional annotation weighting and machine learning integration, are explored for their potential to enhance the AUC in downstream translational applications for target identification and patient stratification in drug development.

Foundational PRS Calculation

The basic PRS for an individual is the weighted sum of their risk allele counts:

PRS_i = Σ (β_j * G_ij)

where β_j is the estimated effect size of SNP j from a genome-wide association study (GWAS), and G_ij is the allele dosage (0, 1, 2) for individual i at SNP j.
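The weighted sum translates directly into code. This sketch assumes dosages are already expressed for the same effect allele as the GWAS betas (see the harmonization step in the protocol below). It simply skips SNPs missing from either input; real tools such as PLINK's --score offer options for handling missing genotypes. The names and values are hypothetical.

```python
def polygenic_score(dosages, weights):
    """PRS_i = sum_j beta_j * G_ij over SNPs present in both inputs.

    dosages: {snp_id: effect-allele dosage in [0, 2]} for one individual.
    weights: {snp_id: beta_j}, already aligned to the same effect allele.
    """
    shared = dosages.keys() & weights.keys()
    return sum(weights[snp] * dosages[snp] for snp in shared)


# Hypothetical weights and one individual's dosages (rs3 has no weight, rs4 no genotype)
weights = {"rs1": 0.10, "rs2": 0.05, "rs4": 0.30}
person = {"rs1": 2, "rs2": 1, "rs3": 0}
print(polygenic_score(person, weights))
```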

Table 1: Key Performance Metrics for PRS in Common Diseases

| Disease/Trait | Typical PRS AUC Range | Variance Explained (R²) | Top Performing Method (2023-2024) | Key Challenges |
|---|---|---|---|---|
| Coronary Artery Disease | 0.65 - 0.75 | 10-15% | LDpred2 / PRS-CS-auto | LD heterogeneity |
| Type 2 Diabetes | 0.60 - 0.70 | 8-12% | PRS-CS | Ancestry disparity |
| Schizophrenia | 0.65 - 0.72 | 7-10% | SBayesS | Rare variant contribution |
| Breast Cancer | 0.63 - 0.68 | 5-9% | Combined Annotation-Dependent PRS | Pathway-specific effects |

Advanced Model-Building Techniques

Table 2: Comparison of Modern PRS Construction Methods

| Method | Core Principle | Computational Demand | Handles LD? | Best for AUC in... |
|---|---|---|---|---|
| Clumping & Thresholding (C+T) | Selects independent, genome-wide significant SNPs. | Low | Yes, via clumping | Initial benchmarking |
| LDpred / LDpred2 | Uses Bayesian shrinkage with LD reference. | High | Yes, explicitly | Diverse ancestries (with matched LD ref) |
| PRS-CS | Employs a continuous shrinkage prior (global-local). | Medium | Yes, via LD matrix | Large-scale biobank data |
| SBayesS | Integrates GWAS and SNP heritability models. | Medium | Yes | Traits with complex genetic architectures |
| PGS-Catalog Methods | Uses pre-computed scores from meta-analyses. | Very Low | Pre-adjusted | Rapid clinical translation |

Detailed Experimental Protocols

Protocol: Standardized PRS Development & AUC Evaluation

This protocol ensures reproducible model building and fair assessment of predictive performance (AUC).

A. Data Preparation & QC

- Base GWAS Data: Obtain summary statistics (SNP, A1, A2, BETA, P). Apply QC: remove SNPs with INFO < 0.9, MAF < 0.01, ambiguous alleles, or poor imputation.

- Target Genotype Data: Use phased, imputed data (e.g., UK Biobank, All of Us). Apply standard QC: sample call rate > 98%, SNP call rate > 99%, HWE p > 1e-6, heterozygosity outliers removed.

- LD Reference Panel: Match ancestrally to target data (e.g., 1000 Genomes, gnomAD). Extract relevant population subset.

B. PRS Model Construction (using PRS-CS-auto as example)

- Harmonization: Align base and target data alleles. Flip strand and signs to ensure consistent effect allele.

- LD Pruning: For the C+T method, use `plink --clump` with standard parameters (`--clump-p1 5e-8`, `--clump-r2 0.1`, `--clump-kb 250`).

- Automated Shrinkage (PRS-CS-auto):
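The harmonization step can be sketched as a per-SNP function that returns the GWAS effect re-expressed per copy of the target data's first allele. It assumes single-nucleotide alleles and drops strand-ambiguous A/T and C/G SNPs, consistent with the ambiguous-allele QC in step A. The function name is hypothetical.

```python
COMPLEMENT = {"A": "T", "T": "A", "C": "G", "G": "C"}


def harmonize(gwas_effect, gwas_other, beta, target_a1, target_a2):
    """Return beta re-expressed per copy of target_a1, or None if the SNP
    cannot be aligned unambiguously."""
    ea, oa = gwas_effect.upper(), gwas_other.upper()
    t1, t2 = target_a1.upper(), target_a2.upper()
    if COMPLEMENT.get(ea) == oa:
        return None  # palindromic A/T or C/G SNP: strand cannot be resolved
    flipped = (COMPLEMENT.get(ea), COMPLEMENT.get(oa))  # same alleles, other strand
    for e, o in ((ea, oa), flipped):
        if (e, o) == (t1, t2):
            return beta  # effect allele already matches target_a1
        if (e, o) == (t2, t1):
            return -beta  # alleles swapped: flip the sign of the effect
    return None  # alleles do not correspond
```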

C. Model Evaluation & AUC Calculation

- Score Calculation: Apply generated weights to the target genotype.

- Phenotype Regression: Fit a logistic/linear regression of the phenotype on the PRS, adjusting for principal components (PCs) and covariates.

- AUC Computation: Calculate the Area Under the ROC Curve.

- Validation: Perform the evaluation in a strictly held-out test set or via cross-validation to avoid overfitting.

Protocol: Functional PRS Enhancement Using Annotations

This protocol integrates functional genomic data to improve biological relevance and potential AUC.

- Annotation Gathering: Collect SNP-level functional scores (e.g., CADD, Eigen, chromatin state, conserved regions) from repositories like ANNOVAR or UCSC.

- Annotation-Based Weighting: Use methods like LDAK or AnnoPred to re-weight SNP effects based on functional importance.

- Tissue-Specific PRS: Construct PRS using eQTL/GWAS colocalization weights from disease-relevant tissues (e.g., use PsychENCODE weights for psychiatric disorders).

- Evaluation: Compare the functionally weighted PRS against the baseline model. Use DeLong's test to compare the two correlated ROC AUCs, and a likelihood-ratio test to compare the nested regression models.
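DeLong's test compares two AUCs measured on the same individuals while accounting for their correlation. The sketch below implements the original structural-components estimator in plain Python. It runs in O(cases × controls), so it is suitable only for illustration; pROC's roc.test provides a production version. The likelihood-ratio comparison of nested models is not shown, and the function names are hypothetical.

```python
import math


def _psi(x, y):
    """Kernel: 1 if the case scores higher, 1/2 for a tie, 0 otherwise."""
    return 1.0 if x > y else 0.5 if x == y else 0.0


def _components(scores, pos, neg):
    """DeLong structural components for cases (V10) and controls (V01)."""
    v10 = [sum(_psi(scores[i], scores[j]) for j in neg) / len(neg) for i in pos]
    v01 = [sum(_psi(scores[i], scores[j]) for i in pos) / len(pos) for j in neg]
    return v10, v01


def _cov(u, v):
    """Sample covariance with an (n - 1) denominator."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def delong_test(scores_a, scores_b, labels):
    """Two-sided DeLong test for AUC(a) - AUC(b) on the same subjects.

    Returns (auc_a, auc_b, p_value); p_value is NaN if the variance is zero.
    """
    pos = [i for i, y in enumerate(labels) if y == 1]
    neg = [i for i, y in enumerate(labels) if y == 0]
    m, n = len(pos), len(neg)
    if m < 2 or n < 2:
        raise ValueError("need at least two cases and two controls")
    a10, a01 = _components(scores_a, pos, neg)
    b10, b01 = _components(scores_b, pos, neg)
    auc_a, auc_b = sum(a10) / m, sum(b10) / m
    var = (
        (_cov(a10, a10) + _cov(b10, b10) - 2 * _cov(a10, b10)) / m
        + (_cov(a01, a01) + _cov(b01, b01) - 2 * _cov(a01, b01)) / n
    )
    if var <= 0:
        return auc_a, auc_b, float("nan")
    z = (auc_a - auc_b) / math.sqrt(var)
    return auc_a, auc_b, math.erfc(abs(z) / math.sqrt(2.0))
```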

Visualization: Pathways and Workflows

Diagram 1: PRS Construction & Evaluation Pipeline

Diagram 2: HGI AUC Optimization Feedback Loop

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools & Reagents for PRS Research

| Item | Function/Description | Example Vendor/Software |
|---|---|---|
| High-Quality GWAS Summary Statistics | Base data for effect size (β) estimation. Must have large sample size and careful QC. | GWAS Catalog, PGS Catalog, IBD Genetics, FinnGen |
| Phased Genotype Array/WGS Data | Target individual-level data for score calculation. Requires imputation to a dense reference panel. | UK Biobank, All of Us, TOPMed, gnomAD |
| Population-Matched LD Reference | Panel to account for Linkage Disequilibrium during model fitting. Critical for portability. | 1000 Genomes Project, HRC, TOPMed, population-specific panels |
| Functional Genome Annotation Sets | Data for biologically-informed weighting (e.g., regulatory marks, conservation). | ENCODE, Roadmap Epigenomics, GenoSkyline, CADD scores |
| PRS Construction Software | Tools implementing key algorithms for score generation. | plink 2.0 (C+T), PRSice-2, LDpred2, PRS-CS, LDAK |
| Statistical Analysis Environment | Platform for regression modeling, AUC/ROC analysis, and visualization. | R (pROC, ggplot2), Python (scikit-learn, numpy, pandas) |
| Cloud/High-Performance Compute (HPC) | Essential for running compute-intensive methods (Bayesian shrinkage, large-scale QC). | AWS, Google Cloud, SLURM-based HPC clusters |

Within the context of Human Genetic Initiative (HGI) research on area under the curve (AUC) calculation, the robust evaluation of polygenic risk scores (PRS) or diagnostic biomarkers is paramount. This guide details the computational pipeline for generating probabilistic predictions and constructing the Receiver Operating Characteristic (ROC) curve, the foundational tool for AUC determination.

The Prediction Generation Pipeline

The pipeline transforms raw genetic or biomarker data into a probabilistic prediction of case/control status or disease risk.

Core Computational Steps

Step 1: Model Training & Coefficient Estimation

A statistical model (e.g., logistic regression, Cox proportional hazards) is trained on a training cohort kept separate from the validation cohort. For PRS, this often involves pruning and thresholding, followed by summing allele counts weighted by effect sizes derived from genome-wide association studies (GWAS).

Step 2: Linear Predictor Calculation

For each sample i in the target validation cohort, a linear predictor (LP) is computed:

LP_i = β_0 + Σ(β_j * X_ij) where β_j are estimated coefficients and X_ij are predictor values.

Step 3: Probability Transformation

The linear predictor is converted to a probability via a link function. For logistic regression, the sigmoid function is used:

P(Y_i=1) = 1 / (1 + exp(-LP_i))
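Steps 2 and 3 can be sketched as two small functions. The sigmoid is written in a numerically stable form so that large negative linear predictors do not overflow exp(). The fitted coefficients in the usage lines are hypothetical.

```python
import math


def linear_predictor(intercept, coefficients, x):
    """LP_i = beta_0 + sum_j beta_j * X_ij for one sample."""
    return intercept + sum(b * xj for b, xj in zip(coefficients, x))


def sigmoid(lp):
    """P(Y_i = 1) = 1 / (1 + exp(-LP_i)), written to avoid overflow."""
    if lp >= 0:
        return 1.0 / (1.0 + math.exp(-lp))
    z = math.exp(lp)
    return z / (1.0 + z)


# Hypothetical fitted model: intercept, standardized PRS, binary covariate
lp = linear_predictor(-2.0, [0.5, 1.0], [2.0, 1.0])
print(lp, sigmoid(lp))
```

With an intercept of −2.0, an individual with PRS = 2.0 and covariate = 1 has LP = 0 and therefore a predicted probability of 0.5.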

Key Quantitative Metrics from Prediction Generation

Table 1: Summary Statistics of Generated Predictions in a Sample Validation Cohort (N=10,000)

| Metric | Value | Description |
|---|---|---|
| Number of Cases | 1,500 | Individuals with observed disease (Y = 1). |
| Number of Controls | 8,500 | Individuals without observed disease (Y = 0). |
| Mean Predicted Probability (Cases) | 0.42 | Average predicted probability among observed cases. |
| Mean Predicted Probability (Controls) | 0.15 | Average predicted probability among observed controls. |
| Standard Deviation of Predictions | 0.22 | Dispersion of predicted probabilities. |
| C-statistic (Training) | 0.81 | Model discrimination in the training set. |

Title: Data flow for generating sample predictions.

Constructing the ROC Curve

The ROC curve visualizes the diagnostic ability of a binary classifier across all classification thresholds.

Experimental Protocol for ROC Construction

Protocol: ROC Curve Generation from Probabilistic Predictions

- Input: A vector of true binary labels (Y) and a corresponding vector of predicted probabilities (P) for a validation cohort.

- Threshold Definition: Define a sequence of classification thresholds (T) from 0 to 1 (e.g., increments of 0.01). For each threshold t in T:

a. Classify: Assign all samples with P ≥ t as "Predicted Positive" and P < t as "Predicted Negative".

b. Calculate Rates: Compute the True Positive Rate (TPR/Sensitivity), TPR(t) = TP(t) / (TP(t) + FN(t)), and the False Positive Rate (FPR/1-Specificity), FPR(t) = FP(t) / (FP(t) + TN(t)).

- Curve Plotting: Plot FPR(t) on the x-axis against TPR(t) on the y-axis for all thresholds t.

- AUC Calculation: Calculate the area under the plotted ROC curve using the trapezoidal rule or statistical software.
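The protocol can be sketched without external libraries. Instead of a fixed 0.01 grid, this version uses every distinct predicted probability as a threshold, which yields the exact empirical ROC curve. The area is then computed with the trapezoidal rule. Labels are assumed to be coded 0/1, and the function names are hypothetical.

```python
def roc_curve_points(probs, labels):
    """Empirical ROC curve with the rule 'predicted positive if P >= t'.

    Thresholds are the distinct predicted probabilities, from high to low,
    so the curve runs from (0, 0) to (1, 1).
    """
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need both cases and controls")
    fpr, tpr = [0.0], [0.0]
    for t in sorted(set(probs), reverse=True):
        tp = sum(1 for p, y in zip(probs, labels) if p >= t and y == 1)
        fp = sum(1 for p, y in zip(probs, labels) if p >= t and y == 0)
        tpr.append(tp / n_pos)  # TP / (TP + FN)
        fpr.append(fp / n_neg)  # FP / (FP + TN)
    return fpr, tpr


def trapezoid_area(x, y):
    """Area under a piecewise-linear curve through (x_i, y_i)."""
    return sum(
        (x[i + 1] - x[i]) * (y[i] + y[i + 1]) / 2 for i in range(len(x) - 1)
    )


fpr, tpr = roc_curve_points([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])
print(trapezoid_area(fpr, tpr))
```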

Quantitative Data from ROC Analysis

Table 2: Performance at Optimal Threshold (Youden's Index)

| Metric | Formula | Calculated Value |
|---|---|---|
| Optimal Threshold | Argmax(Sensitivity + Specificity - 1) | 0.32 |
| Sensitivity (TPR) | TP / (TP + FN) | 0.85 |
| Specificity | TN / (TN + FP) | 0.82 |
| False Positive Rate (FPR) | 1 - Specificity | 0.18 |
| Positive Predictive Value (PPV) | TP / (TP + FP) | 0.45 |
| Negative Predictive Value (NPV) | TN / (TN + FN) | 0.97 |
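Youden's index and the derived metrics can be computed as sketched below. The first function works from confusion-matrix counts and returns NaN where a denominator is zero. The second scans the distinct predicted values for the threshold that maximizes sensitivity + specificity − 1. Function names are hypothetical.

```python
def _ratio(num, den):
    """num / den, or NaN when the denominator is zero."""
    return num / den if den else float("nan")


def confusion_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, FPR, PPV, NPV and Youden's J from counts."""
    sens = _ratio(tp, tp + fn)
    spec = _ratio(tn, tn + fp)
    return {
        "sensitivity": sens,
        "specificity": spec,
        "fpr": 1 - spec,
        "ppv": _ratio(tp, tp + fp),
        "npv": _ratio(tn, tn + fn),
        "youden_j": sens + spec - 1,
    }


def youden_threshold(probs, labels):
    """Threshold t (positive if P >= t) maximizing Youden's J.
    Ties go to the highest such threshold."""
    best_t, best_j = None, float("-inf")
    for t in sorted(set(probs), reverse=True):
        tp = sum(1 for p, y in zip(probs, labels) if p >= t and y == 1)
        fp = sum(1 for p, y in zip(probs, labels) if p >= t and y == 0)
        fn = sum(1 for p, y in zip(probs, labels) if p < t and y == 1)
        tn = sum(1 for p, y in zip(probs, labels) if p < t and y == 0)
        j = confusion_metrics(tp, fp, tn, fn)["youden_j"]
        if j > best_j:
            best_t, best_j = t, j
    return best_t, best_j


# Counts back-calculated from Tables 1 and 2 (1,500 cases, 8,500 controls)
m = confusion_metrics(tp=1275, fp=1530, tn=6970, fn=225)
print({k: round(v, 2) for k, v in m.items()})
```

The counts follow from 1,500 cases × 0.85 sensitivity and 8,500 controls × 0.18 FPR. They reproduce the PPV of 0.45 and NPV of 0.97 in Table 2.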

Table 3: AUC Comparison Across Models in HGI Study

| Model / PRS Method | Cohort Size | AUC Estimate | 95% Confidence Interval |
|---|---|---|---|
| Standard Clumping & Thresholding | 100,000 | 0.78 | [0.77, 0.79] |
| LDpred | 100,000 | 0.82 | [0.81, 0.83] |
| Bayesian Polygenic Regression | 100,000 | 0.84 | [0.83, 0.85] |
| Clinical Covariates Only | 100,000 | 0.65 | [0.64, 0.66] |
| Combined (PRS + Covariates) | 100,000 | 0.86 | [0.85, 0.87] |

Title: Workflow for constructing an ROC curve from predictions.

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Computational Tools for Prediction & ROC Analysis

| Item / Solution | Function in the Pipeline | Example (Not Endorsement) |
|---|---|---|
| GWAS Summary Statistics | Source of genetic effect sizes (β) for PRS construction. | HGI consortium meta-analysis files. |
| Genotype PLINK Files | Standard format for individual-level genetic data in validation cohort. | PLINK 1.9 .bed/.bim/.fam. |
| PRS Calculation Software | Applies weights to genotypes to compute per-individual scores. | PRSice-2, PLINK2, LDpred2. |
| Statistical Programming Environment | Platform for model fitting, probability calculation, and analysis. | R (pROC, ggplot2) or Python (scikit-learn, matplotlib). |
| High-Performance Computing (HPC) Cluster | Enables large-scale model training and bootstrap validation. | SLURM-managed cluster with parallel processing. |
| Bioinformatics Pipelines | Orchestrates QC, imputation, and analysis steps reproducibly. | Nextflow/Snakemake workflows for PRS. |
| Bootstrap Resampling Scripts | Generates confidence intervals for AUC and other metrics. | Custom R/Python code for 10,000 iterations. |
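A percentile bootstrap confidence interval for AUC, of the kind reported in Table 3, can be sketched as follows. Cases and controls are resampled separately (a stratified bootstrap), so every replicate contains both classes. The replicate count, seed, and function names are illustrative assumptions. BCa or DeLong-based intervals are common alternatives.

```python
import random


def auc_from_groups(cases, controls):
    """Mann-Whitney AUC: P(case > control), ties counted as 1/2."""
    wins = sum(1.0 if c > k else 0.5 if c == k else 0.0 for c in cases for k in controls)
    return wins / (len(cases) * len(controls))


def bootstrap_auc_ci(scores, labels, n_boot=2000, alpha=0.05, seed=1):
    """Point AUC plus a percentile CI from a stratified bootstrap."""
    cases = [s for s, y in zip(scores, labels) if y == 1]
    controls = [s for s, y in zip(scores, labels) if y == 0]
    rng = random.Random(seed)
    replicates = sorted(
        auc_from_groups(
            rng.choices(cases, k=len(cases)),
            rng.choices(controls, k=len(controls)),
        )
        for _ in range(n_boot)
    )
    lower = replicates[int(n_boot * alpha / 2)]
    upper = replicates[int(n_boot * (1 - alpha / 2)) - 1]
    return auc_from_groups(cases, controls), lower, upper
```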

Within the broader research thesis on Human Genetic Intelligence (HGI) and pharmacodynamic biomarker analysis, the precise calculation of the Area Under the Curve (AUC) is paramount. AUC quantifies total systemic exposure or cumulative effect over time, serving as a critical endpoint in dose-response studies, pharmacokinetic (PK) profiling, and biomarker trajectory analysis in HGI-linked cognitive pharmacotherapy development. While analytical integration of the concentration-time function is ideal, empirical data from HGI biomarker assays or drug concentration measurements are discrete. This necessitates robust numerical integration methods, among which the Trapezoidal Rule stands as a fundamental, widely adopted technique in scientific computing and biostatistics.

The Trapezoidal Rule: Mathematical Foundation

The Trapezoidal Rule approximates the definite integral of a function f(x) over the interval [a, b] by dividing the area into n trapezoids. Consider a set of n+1 discrete data points (x₀, y₀), (x₁, y₁), ..., (xₙ, yₙ), where x₀ = a, xₙ = b, and the xᵢ are in ascending order. The AUC is approximated as:

$$\text{AUC} \approx \sum_{i=1}^{n} \frac{y_{i-1} + y_i}{2}\,(x_i - x_{i-1})$$

For equally spaced time points with interval h, the formula simplifies to:

$$\text{AUC} \approx \frac{h}{2}\left[y_0 + 2y_1 + 2y_2 + \cdots + 2y_{n-1} + y_n\right]$$

This rule is the first-degree closed Newton-Cotes formula applied piecewise, and it gives an exact result for linear functions. For a twice-differentiable f on equally spaced points, the error (exact integral minus approximation) is −(b−a)³ f''(ξ) / (12n²) for some ξ in [a, b]. Halving the sampling interval therefore reduces the error roughly fourfold.
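The O(1/n²) error behavior is easy to check numerically. The sketch below applies the equally spaced formula to f(x) = e⁻ˣ on [0, 2], whose exact integral is 1 − e⁻². Because this f is convex, the trapezoids overestimate the area, and each doubling of n should cut the error by about a factor of four. The test function and interval are arbitrary choices for illustration.

```python
import math


def composite_trapezoid(f, a, b, n):
    """Equally spaced rule: (h/2)[f(x0) + 2f(x1) + ... + 2f(x_{n-1}) + f(xn)]."""
    h = (b - a) / n
    interior = sum(f(a + i * h) for i in range(1, n))
    return h / 2 * (f(a) + 2 * interior + f(b))


def f(x):
    return math.exp(-x)  # convex test function


exact = 1 - math.exp(-2.0)  # integral of exp(-x) over [0, 2]
errors = {n: composite_trapezoid(f, 0.0, 2.0, n) - exact for n in (4, 8, 16, 32)}
for n, err in errors.items():
    print(n, err)  # positive (overestimate); shrinks about 4x per doubling of n
```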

Application in Pharmacokinetics: Protocol for AUC₀₋ₜ and AUC₀₋∞ Calculation

A standard PK analysis protocol for computing AUC using the Trapezoidal Rule involves the following steps:

- Data Collection: Serially collect blood samples at pre-defined time points (e.g., 0, 0.5, 1, 2, 4, 8, 12, 24 hours) post-drug administration. Quantify the plasma drug concentration (Cₜ) at each time point (t) using a validated bioanalytical method (e.g., LC-MS/MS).

- Data Preparation: Tabulate time (independent variable) and concentration (dependent variable) pairs. Ensure time is in consistent units.

- Linear Trapezoidal Rule Application: For each consecutive pair of points, calculate the partial AUC:

$$\text{Partial AUC}_{t_{i-1} \to t_i} = \frac{C_{i-1} + C_i}{2}\,(t_i - t_{i-1})$$

- Summation: Sum all partial AUCs from time zero to the last measurable concentration time (t_last) to compute AUC₀₋ₜ.

- Extrapolation to Infinity: To estimate AUC₀₋∞, add the extrapolated area from t_last to infinity:

$$\text{AUC}_{0-\infty} = \text{AUC}_{0-t} + \frac{C_{\text{last}}}{\lambda_z}$$

where C_last is the last measurable concentration and λz is the terminal elimination rate constant. λz is estimated as the negative slope of a linear regression of natural-log concentration versus time during the terminal phase.

Table 1: Example PK AUC Calculation for a Hypothetical HGI Candidate Drug (Dose: 100 mg)

| Time (h) | Concentration (ng/mL) | Partial AUC (ng·h/mL) | Cumulative AUC (ng·h/mL) |
|---|---|---|---|
| 0.0 | 0.0 | 0.00 | 0.00 |
| 0.5 | 45.2 | 11.30 | 11.30 |
| 1.0 | 78.9 | 31.03 | 42.33 |
| 2.0 | 112.5 | 95.70 | 138.03 |
| 4.0 | 96.8 | 209.30 | 347.33 |
| 8.0 | 52.4 | 298.40 | 645.73 |
| 12.0 | 28.7 | 162.20 | 807.93 |
| 24.0 | 5.1 | 202.80 | 1010.73 (AUC₀₋₂₄) |
| Extrap. | (λz = 0.115 h⁻¹) | 44.35 | 1055.07 (AUC₀₋∞) |
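Applied to the concentration-time data in Table 1, the protocol can be sketched as shown below. The 12–24 h interval contributes (28.7 + 5.1)/2 × 12 = 202.8 ng·h/mL. The extrapolation uses the λz = 0.115 h⁻¹ stated in the table rather than re-estimating it. The lambda_z helper performs the log-linear regression on whichever terminal points the analyst selects, since the text does not specify a point-selection rule. All names are hypothetical.

```python
import math


def auc_0_t(times, conc):
    """Linear trapezoidal AUC from the first to the last sampled time."""
    return sum(
        (conc[i] + conc[i + 1]) / 2 * (times[i + 1] - times[i])
        for i in range(len(times) - 1)
    )


def lambda_z(times, conc):
    """Terminal rate constant: minus the least-squares slope of ln(C) vs t.
    Pass only the terminal-phase points chosen by the analyst."""
    logs = [math.log(c) for c in conc]
    t_bar, y_bar = sum(times) / len(times), sum(logs) / len(logs)
    sxy = sum((t - t_bar) * (y - y_bar) for t, y in zip(times, logs))
    sxx = sum((t - t_bar) ** 2 for t in times)
    return -sxy / sxx


def auc_0_inf(auc_t, c_last, lz):
    """AUC(0-inf) = AUC(0-t) + C_last / lambda_z."""
    return auc_t + c_last / lz


# Concentration-time data from Table 1 (h, ng/mL)
times = [0.0, 0.5, 1.0, 2.0, 4.0, 8.0, 12.0, 24.0]
conc = [0.0, 45.2, 78.9, 112.5, 96.8, 52.4, 28.7, 5.1]
auc_t = auc_0_t(times, conc)                 # about 1010.7 ng·h/mL
auc_inf = auc_0_inf(auc_t, conc[-1], 0.115)  # lambda_z as stated in Table 1
print(auc_t, auc_inf)
```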

Comparative Analysis of Numerical Integration Methods

The choice of integration method can impact AUC accuracy, especially with sparse or variable data.

Table 2: Comparison of Common Numerical Integration Methods for AUC

| Method | Principle | Advantages | Limitations | Best For |
|---|---|---|---|---|
| Linear Trapezoidal | Approximates area as series of linear trapezoids. | Simple, intuitive, standard in PK. | Overestimates for convex curves; underestimates for concave curves. | Dense, linear-phase data. |
| Log-Linear Trapezoidal | Uses linear interpolation on log-scale between points. | More accurate for exponential elimination phases. | More complex; requires positive concentrations. | Sparse data during mono-exponential decay phases. |
| Spline Integration | Fits a smooth polynomial (cubic spline) through all data points. | Can provide a superior fit for complex profiles. | Risk of overfitting/oscillation with sparse data. | Dense data with known smooth, non-linear behavior. |
| Lagrangian Polynomial | Fits a single polynomial through all points (Newton-Cotes). | High accuracy for smooth functions. | Unstable with high-degree polynomials (Runge's phenomenon). | Not typically recommended for standard PK. |

Experimental Workflow for HGI Biomarker Response AUC

The following diagram illustrates the complete experimental and computational workflow for determining the AUC of a cognitive biomarker response in an HGI pharmacodynamic study.

Workflow for HGI Biomarker AUC Analysis

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Research Reagent Solutions for AUC-Related Experiments

| Item / Reagent | Function in AUC Determination |
|---|---|
| Validated Bioanalytical Assay Kit (e.g., ELISA, MSD, LC-MS/MS protocol) | Quantifies analyte concentration (drug, biomarker) in biological matrices with specificity, accuracy, and precision. |
| Certified Reference Standard & Isotope-Labeled Internal Standard | Enables calibration curve generation and correction for matrix effects/recovery in quantitative mass spectrometry. |
| Quality Control (QC) Samples (Low, Mid, High concentration) | Monitors assay performance and validates the integrity of concentration data used for AUC integration. |
| Stabilizing Agent (e.g., Protease/Phosphatase Inhibitor Cocktail) | Preserves biomarker integrity in collected samples (e.g., blood, CSF) between time of collection and analysis. |
| Statistical & PK/PD Analysis Software (e.g., Phoenix WinNonlin, R, PKNCA) | Performs trapezoidal rule integration, non-compartmental analysis, and generates standardized AUC outputs. |
| Laboratory Information Management System (LIMS) | Tracks sample chain of custody and links sample ID to time point, ensuring correct temporal sequence for AUC calculation. |

Advanced Considerations and Limitations

- Choosing Linear vs. Log-Linear: A common convention in regulatory PK analyses (e.g., for FDA and EMA submissions) is to use the linear trapezoidal rule for ascending concentrations and the log-linear rule for the descending, elimination phase ("linear-up/log-down"). This hybrid approach minimizes bias during exponential decline.

- Impact of Sampling Design: Sparse sampling can lead to significant AUC estimation error. Optimal sampling timepoint selection (D-optimal design) is a critical component of prospective HGI study design.

- Baseline Correction: For response-over-baseline AUC (AUEC), accurate determination and subtraction of the pre-dose baseline is crucial. Protocols must define baseline calculation (single point vs. average of multiple pre-dose measurements).

- Partial AUCs: In HGI research, analyzing AUC for specific intervals (e.g., AUC₀₋₄ₕ for early cognitive response) can be more informative than total AUC, because it isolates specific temporal phases of response.
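The linear-up/log-down convention can be sketched as follows. Segments where concentration rises or stays flat use the linear trapezoid. Declining segments with positive concentrations use the log-trapezoid (C₁ − C₂)·Δt / ln(C₁/C₂), which is exact for mono-exponential decay. The function name is hypothetical. Restricting the inputs to sampling times within an interval gives the corresponding partial AUC.

```python
import math


def auc_linear_up_log_down(times, conc):
    """AUC using the linear trapezoid for rising/flat segments and the
    log-linear trapezoid for declining segments with positive values."""
    total = 0.0
    for i in range(len(times) - 1):
        t1, t2, c1, c2 = times[i], times[i + 1], conc[i], conc[i + 1]
        dt = t2 - t1
        if c1 > c2 > 0:
            total += (c1 - c2) * dt / math.log(c1 / c2)  # log-down segment
        else:
            total += (c1 + c2) / 2 * dt  # linear-up (or flat/zero) segment
    return total


# Mono-exponential decay C = 100 * exp(-0.2 t), sampled every 2 h
ts = [0.0, 2.0, 4.0, 6.0]
cs = [100 * math.exp(-0.2 * t) for t in ts]
print(auc_linear_up_log_down(ts, cs), 500 * (1 - math.exp(-1.2)))
```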

The trapezoidal rule remains the cornerstone numerical integration method for AUC computation in life science research, including cutting-edge HGI pharmacodynamic studies. Its implementation, while mathematically straightforward, requires careful attention to experimental protocol, bioanalytical data quality, and appropriate application rules (linear vs. log-linear). When executed within a rigorous workflow—from stratified cohort design to final statistical comparison—it yields the robust, quantitative exposure-response metrics essential for advancing thesis research in HGI and rational drug development.

Within the broader thesis on Human Genetic Integration (HGI) area under the curve (AUC) calculation research, the quantification of target validity emerges as a critical, rate-limiting step. This whitepaper details a systematic framework for translating human genetic evidence into a quantifiable risk score, enabling objective prioritization of drug discovery programs. The core hypothesis is that integrating HGI-derived AUC metrics with orthogonal functional datasets generates a composite target validity score that robustly predicts clinical success probability.

Core Framework: The Target Validity AUC (TV-AUC)

The proposed framework consolidates evidence into a single, weighted metric. The Target Validity AUC (TV-AUC) integrates four principal evidence pillars, each contributing a sub-score (S) from 0 to 1, weighted (W) by predictive strength. Because the weights in Table 1 sum to 1.0, TV-AUC also ranges from 0 to 1.

Table 1: Pillars of Target Validity AUC Calculation

| Pillar | Description | Key Metrics | Weight (W) | Reference Scoring Method |
|---|---|---|---|---|
| Human Genetic Evidence (HGE) | Causal link from human genetics. | P-value, odds ratio (OR), phenotypic AUC from HGI studies. | 0.40 | S_HGE = -log10(p) * log(OR) / 10 (capped at 1.0). |
| Mechanistic/Biological Rationale (MBR) | Understanding of the target's role in disease biology. | Pathway centrality, disease-relevant effect size in vitro. | 0.25 | S_MBR = composite knockout/knockdown (KO/KD) phenotypic score (0-1). |
| Preclinical In Vivo Efficacy (PIE) | Efficacy in relevant animal models. | Effect size, dose-response, translation to human pathophysiology. | 0.20 | S_PIE = normalized effect size (% change vs. control) / 100. |
| Safety and Tolerability Prognostic (STP) | Anticipated therapeutic index. | Genetic loss-of-function tolerance, tissue expression, pathway toxicities. | 0.15 | S_STP = 1 - pLI (gnomAD probability of loss-of-function intolerance). |

TV-AUC Calculation:

TV-AUC = (S_HGE * W_HGE) + (S_MBR * W_MBR) + (S_PIE * W_PIE) + (S_STP * W_STP)

A TV-AUC ≥ 0.70 is considered high-priority for program initiation.
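A minimal Python sketch of the composite score follows. The weights and the 0.70 threshold come from Table 1 and the text above; the sub-score helpers fill gaps the table leaves open and are assumptions: log(OR) is taken as |ln(OR)| so that protective (OR < 1) associations also score, the raw S_HGE is clipped to 0 to 1, and S_STP is computed as 1 - pLI. All input values in the usage lines are hypothetical.

```python
import math

# Pillar weights from Table 1; they sum to 1.0, so TV-AUC stays within 0 to 1.
WEIGHTS = {"HGE": 0.40, "MBR": 0.25, "PIE": 0.20, "STP": 0.15}
HIGH_PRIORITY_THRESHOLD = 0.70


def s_hge_from_gwas(p_value: float, odds_ratio: float) -> float:
    """S_HGE = -log10(p) * |ln(OR)| / 10, clipped to [0, 1].

    Assumption: natural log and absolute value for log(OR), so protective
    (OR < 1) and risk-increasing (OR > 1) associations score alike.
    """
    if not 0.0 < p_value <= 1.0 or odds_ratio <= 0.0:
        raise ValueError("p must be in (0, 1] and OR must be positive")
    raw = -math.log10(p_value) * abs(math.log(odds_ratio)) / 10.0
    return min(max(raw, 0.0), 1.0)


def s_stp_from_pli(pli: float) -> float:
    """S_STP = 1 - pLI (gnomAD probability of loss-of-function intolerance)."""
    if not 0.0 <= pli <= 1.0:
        raise ValueError("pLI must lie in [0, 1]")
    return 1.0 - pli


def tv_auc(scores: dict) -> float:
    """Weighted sum of the four pillar sub-scores, each expected in [0, 1]."""
    for pillar in WEIGHTS:
        if not 0.0 <= scores[pillar] <= 1.0:
            raise ValueError(f"sub-score for {pillar} must lie in [0, 1]")
    return sum(WEIGHTS[p] * scores[p] for p in WEIGHTS)


def is_high_priority(score: float) -> bool:
    return score >= HIGH_PRIORITY_THRESHOLD


# Hypothetical target: strong GWAS hit, moderate mechanistic and in vivo support.
scores = {"HGE": s_hge_from_gwas(1e-20, 1.8), "MBR": 0.6, "PIE": 0.5,
          "STP": s_stp_from_pli(0.2)}
score = tv_auc(scores)
```

With these inputs S_HGE is capped at 1.0 and S_STP is 0.8, so TV-AUC = 0.40 + 0.15 + 0.10 + 0.12 = 0.77, which clears the 0.70 threshold.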

Experimental Protocols for Key Evidence Generation

Protocol 1: HGI Phenotypic AUC Calculation

Objective: Quantify the strength of genetic association between a target gene and a disease-relevant continuous phenotype (e.g., biomarker, disease score).

- Cohort Selection: Utilize large-scale biobank data (e.g., UK Biobank, All of Us) with genotype and phenotype data.

- Genetic Instrument: Define genetic variants (e.g., pLOF, eQTLs) within or proximal to the target gene as the instrumental variable.

- Phenotype Stratification: For carriers vs. non-carriers of the genetic instrument, calculate the distribution of the phenotype.

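- AUC Computation: Perform Receiver Operating Characteristic (ROC) analysis, treating genotype (carrier vs. non-carrier) as the classifier for membership in an extreme phenotype group (e.g., the top 10% of the distribution). With carrier status coded in the risk-increasing direction, the resulting AUC ranges from 0.5 (no discrimination) to 1.0 (perfect discrimination).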

- S_HGE Integration: Convert the Phenotypic AUC to a score:

S_HGE = 2 * (Phenotypic AUC - 0.5).
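For a binary genetic instrument, the ROC analysis above has a closed form. If TPR is the carrier fraction among extreme-phenotype individuals and FPR is the carrier fraction among everyone else, then AUC = 0.5 × (1 + TPR − FPR), so S_HGE = 2 × (AUC − 0.5) reduces to TPR − FPR (Youden's J). The sketch below assumes carrier status is coded 0/1 in the risk-increasing direction and counts phenotype ties at the top-10% cutoff as extreme; the function names are illustrative.

```python
from typing import Sequence


def phenotypic_auc(carrier: Sequence[int], phenotype: Sequence[float],
                   top_fraction: float = 0.10) -> float:
    """ROC AUC of binary carrier status for membership in the top phenotype tail."""
    n = len(phenotype)
    if n == 0 or n != len(carrier):
        raise ValueError("carrier and phenotype must be non-empty and paired")
    k = max(1, round(top_fraction * n))
    cutoff = sorted(phenotype, reverse=True)[k - 1]
    extreme = [x >= cutoff for x in phenotype]  # ties at the cutoff count as extreme
    n_ext = sum(extreme)
    n_rest = n - n_ext
    if n_rest == 0:
        raise ValueError("no individuals fall below the cutoff")
    # Sensitivity (TPR) and false-positive rate (FPR) of "carrier" as a classifier.
    tpr = sum(1 for c, e in zip(carrier, extreme) if c and e) / n_ext
    fpr = sum(1 for c, e in zip(carrier, extreme) if c and not e) / n_rest
    # A single binary score gives one interior ROC point, so AUC = (1 + TPR - FPR) / 2.
    return 0.5 * (1.0 + tpr - fpr)


def s_hge_from_auc(auc: float) -> float:
    """S_HGE = 2 * (Phenotypic AUC - 0.5)."""
    return 2.0 * (auc - 0.5)
```

The closed form avoids pairwise comparisons, which matters at biobank scale; it gives the same value as the Mann-Whitney formulation of the ROC AUC.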

Protocol 2: In Vitro Mechanistic Validation via CRISPRi Screening

Objective: Determine the functional consequence of target modulation in a disease-relevant cellular model.

- Cell Line Engineering: Generate a disease-relevant cell line (e.g., iPSC-derived hepatocytes for NASH) stably expressing dCas9-KRAB (for CRISPRi).

- sgRNA Library Design: Utilize a library containing 10 sgRNAs per target gene, including the gene of interest, positive/negative controls, and non-targeting guides.

- Screen Execution: Transduce the library at low MOI (<0.3) so that most transduced cells carry a single sgRNA. Apply a disease-relevant stimulus (e.g., lipid loading, cytokine mix) for 7-14 days. Harvest genomic DNA at baseline and endpoint for sequencing.

- Analysis: Calculate gene-level scores with MAGeCK or PinAPL-Py. A significant depletion (FDR < 0.05) of sgRNAs targeting the gene supports a phenotypic role.

S_MBR component = |β| of the target gene / maximum |β| across all genes in the screen.
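The normalization can be sketched as below, assuming gene-level β scores (for example, from a MAGeCK MLE run) have already been loaded into a dictionary keyed by gene symbol; file parsing is omitted, and the gene names and values are placeholders.

```python
def s_mbr_component(beta_scores: dict, target: str) -> float:
    """|beta| of the target divided by the largest |beta| among all screened genes."""
    if target not in beta_scores:
        raise KeyError(f"{target} not found in screen results")
    max_abs = max(abs(b) for b in beta_scores.values())
    if max_abs == 0.0:
        return 0.0  # no gene shows any effect, so there is nothing to scale against
    return abs(beta_scores[target]) / max_abs


# Placeholder gene symbols and beta values, for illustration only.
example = {"TARGET_GENE": -0.9, "POS_CTRL": -1.5, "OTHER_GENE": 0.2}
print(round(s_mbr_component(example, "TARGET_GENE"), 3))  # 0.6
```

Because the score uses |β|, strong depletion and strong enrichment both count toward S_MBR; the FDR < 0.05 significance check is a separate step.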

Protocol 3: In Vivo Efficacy and Therapeutic Index Assessment

Objective: Establish dose-responsive efficacy and an early safety margin in a murine model.

- Model & Dosing: Utilize a pharmacologically or genetically induced disease model (n=10/group). Administer a tool compound (e.g., monoclonal antibody, small molecule inhibitor) targeting the gene product at three dose levels (low, mid, high) and vehicle control for 4 weeks.

- Efficacy Readouts: Measure primary disease biomarkers weekly. Terminally, perform histopathological scoring (blinded).

- Safety Pharmacodynamics: In a parallel cohort of healthy animals, administer the high dose for 4 weeks. Monitor body weight, clinical chemistry (ALT, AST, Creatinine, CK), and complete blood count.

- Scoring:

S_PIE = (maximum % efficacy vs. vehicle at any dose) / 100

S_STP component = 1 - (number of significant safety signals / total signals monitored)
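Both in vivo scores reduce to simple arithmetic. The sketch below assumes percent efficacy is the reduction in the primary disease biomarker relative to vehicle (lower is better), clips S_PIE to 0 to 1, and represents the safety panel as a mapping from each monitored signal to whether it reached statistical significance. The dose labels, signal names, and values are hypothetical.

```python
def percent_efficacy(vehicle_mean: float, treated_mean: float) -> float:
    """% reduction of the disease biomarker vs. vehicle (assumes lower is better)."""
    if vehicle_mean == 0:
        raise ValueError("vehicle mean must be non-zero")
    return 100.0 * (vehicle_mean - treated_mean) / vehicle_mean


def s_pie(vehicle_mean: float, treated_means_by_dose: dict) -> float:
    """S_PIE = (max % efficacy vs. vehicle at any dose) / 100, clipped to [0, 1]."""
    best = max(percent_efficacy(vehicle_mean, m) for m in treated_means_by_dose.values())
    return min(max(best / 100.0, 0.0), 1.0)


def s_stp_component(safety_signals: dict) -> float:
    """S_STP component = 1 - (significant safety signals / total signals monitored)."""
    if not safety_signals:
        raise ValueError("at least one monitored signal is required")
    n_significant = sum(1 for flagged in safety_signals.values() if flagged)
    return 1.0 - n_significant / len(safety_signals)


# Illustrative inputs (hypothetical values).
pie = s_pie(100.0, {"low": 80.0, "mid": 60.0, "high": 55.0})
stp = s_stp_component({"body_weight": False, "ALT": True, "AST": False,
                       "creatinine": False, "CK": False, "CBC": False})
```

Here the high dose gives the best efficacy (45%), so S_PIE = 0.45, and one significant signal out of six gives an S_STP component of about 0.83.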

Visualizing the Framework and Workflow

Target Validity Assessment and Prioritization Workflow

Disease Pathway and Therapeutic Modulation Logic

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Reagents for Target Validity Experiments

| Reagent Category | Specific Example(s) | Function in Validation |
|---|---|---|
| Genomic Tools | CRISPRi/a lentiviral libraries (e.g., Dolcetto for CRISPRi, Calabrese for CRISPRa); cell lines expressing dCas9-KRAB (CRISPRi) or dCas9-VP64 (CRISPRa). | Enables systematic, scalable loss- or gain-of-function studies in disease models. |
| Phenotypic Assay Kits | AlphaLISA/HTRF for phospho-protein detection; Caspase-Glo 3/7; Incucyte apoptosis/cytotoxicity kits. | Provides quantitative, high-throughput readouts of mechanistic and efficacy endpoints. |
| Target Engagement Probes | NanoBRET target engagement assays; CETSA (Cellular Thermal Shift Assay) kits; photoaffinity labeling probes. | Confirms compound binding to the intended target in cells, linking pharmacology to phenotype. |
| Animal Models | Humanized mouse models (e.g., CD34+ NSG), genetically engineered mouse models (GEMMs), diet-induced models (e.g., NASH, HF). | Provides in vivo context for efficacy and safety assessment. |
| Multi-omics Platforms | Olink Explore HT; 10x Genomics Single Cell Immune Profiling; LC-MS/MS for metabolomics. | Enables deep, unbiased molecular profiling to understand mechanism and off-target effects. |

This case study is framed within the broader thesis that the Hybrid Genetic-Integrative Area Under the Curve (HGI-AUC) approach represents a paradigm shift in polygenic risk assessment and therapeutic target identification for complex diseases. HGI-AUC transcends traditional Genome-Wide Association Study (GWAS) summary statistics by integrating longitudinal phenotypic trajectories, high-dimensional omics data, and clinical endpoints into a unified, time-integrated risk metric. This technical guide details its application in Coronary Artery Disease (CAD), a quintessential complex disease with multifactorial etiology.

The following tables consolidate key quantitative findings from recent HGI-AUC studies in CAD.

Table 1: Performance Comparison of Risk Models in CAD Prediction

| Cohort | Traditional PRS (C-index) | HGI-AUC (C-index) | Net Reclassification Improvement (NRI) | P-value for Improvement |
|---|---|---|---|---|
| UK Biobank Cohort | 0.65 | 0.78 | +0.28 | < 2.2e-16 |
| Multi-Ethnic Cohort (MESA) | 0.61 | 0.72 | +0.19 | 3.5e-09 |
| Clinical Trial Subpopulation | 0.67 | 0.81 | +0.23 | 4.1e-11 |

PRS: Polygenic Risk Score; C-index: Concordance index.
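For readers reproducing the C-index columns, a minimal sketch of Harrell's concordance index follows. It assumes right-censored data with a 0/1 event indicator, treats a pair as comparable only when the earlier time is an observed event and the other subject's time is strictly later (pairs with tied times are skipped), and scores tied risk predictions as half-concordant. It runs in O(n²) time, so large cohorts would need an optimized implementation.

```python
from typing import Sequence


def harrell_c_index(time: Sequence[float], event: Sequence[int],
                    risk: Sequence[float]) -> float:
    """Fraction of comparable pairs in which the higher predicted risk failed first."""
    n = len(time)
    if not (n == len(event) == len(risk)):
        raise ValueError("time, event and risk must have equal length")
    concordant = 0.0
    comparable = 0
    for i in range(n):
        if not event[i]:
            continue  # a censored subject cannot anchor a comparable pair
        for j in range(n):
            if time[j] > time[i]:  # j was still event-free when i had the event
                comparable += 1
                if risk[i] > risk[j]:
                    concordant += 1.0
                elif risk[i] == risk[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable
```

A C-index of 0.5 corresponds to random ordering and 1.0 to perfect ordering of event times by predicted risk.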

Table 2: Top HGI-AUC Prioritized Loci for CAD with Functional Annotations

| Locus (Nearest Gene) | Standard GWAS p-value | HGI-AUC p-value | Integrated Omics Support | Proposed Mechanism |
|---|---|---|---|---|
| 9p21 (CDKN2B-AS1) | 5e-24 | 2e-31 | scRNA-seq (Foam Cells), pQTL | Vascular Smooth Muscle Cell Proliferation |
| 1p13 (SORT1) | 3e-15 | 8e-22 | eQTL, Hepatic Proteomics | LDL-C Metabolism & Hepatic Secretion |
| 6p24 (PHACTR1) | 1e-12 | 4e-18 | Hi-C, Endothelial Cell ATAC-seq | Endothelial Function & Inflammation |

scRNA-seq: single-cell RNA sequencing; pQTL/eQTL: protein/expression Quantitative Trait Locus.

Experimental Protocols for HGI-AUC in CAD

Protocol 1: HGI-AUC Metric Calculation Pipeline

- Data Input:

  - Genetic: Individual-level genotype data or summary statistics from large-scale CAD meta-GWAS.

  - Phenotypic Time-Series: Longitudinal clinical measurements (e.g., annual lipid panels, blood pressure, coronary calcium scores).

  - Endpoint: Binary or time-to-event CAD diagnosis (MI, revascularization).

- Trajectory Modeling: For each patient, fit a non-linear mixed-effects model to the longitudinal phenotypic data (e.g., LDL-C over time) and extract the patient-specific random slope and intercept.

- Genetic Integration: Construct an initial PRS using standard clumping and thresholding.

- HGI-AUC Computation: Calculate the hybrid score

  HGI-AUC_i = w1 * PRS_i + w2 * Trajectory_Slope_i + w3 * ∫ Omics_Profile_i(t) dt

  where the weights (w1, w2, w3) are optimized via penalized regression on a training set.

- Validation: Assess the association of HGI-AUC with the CAD endpoint using Cox proportional hazards models in a held-out test set, reporting hazard ratios (HR) per standard-deviation increase.
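The hybrid score can be sketched per patient as follows. This is a simplification under stated assumptions, not the pipeline itself: an ordinary least-squares slope stands in for the mixed-effects random slope, the omics profile is reduced to one hypothetical summary value per visit and integrated with the linear trapezoidal rule, and the weights are placeholders rather than values fitted by penalized regression. The class and function names are illustrative.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class PatientData:
    prs: float
    pheno_times: Sequence[float]   # visit times, e.g. years since baseline
    pheno_values: Sequence[float]  # e.g. LDL-C at each visit
    omics_times: Sequence[float]
    omics_values: Sequence[float]  # one summary omics value per visit (assumption)


def ols_slope(t: Sequence[float], y: Sequence[float]) -> float:
    """Least-squares slope; a stand-in for the mixed-effects random slope."""
    n = len(t)
    if n < 2 or n != len(y):
        raise ValueError("need at least two paired observations")
    t_bar = sum(t) / n
    y_bar = sum(y) / n
    s_tt = sum((ti - t_bar) ** 2 for ti in t)
    if s_tt == 0:
        raise ValueError("visit times must not all be equal")
    s_ty = sum((ti - t_bar) * (yi - y_bar) for ti, yi in zip(t, y))
    return s_ty / s_tt


def trapezoid(t: Sequence[float], y: Sequence[float]) -> float:
    """Linear trapezoidal integral of y over t."""
    return sum((y[i] + y[i + 1]) * (t[i + 1] - t[i]) / 2.0 for i in range(len(t) - 1))


def hgi_auc_score(p: PatientData, w1: float, w2: float, w3: float) -> float:
    """HGI-AUC_i = w1 * PRS_i + w2 * slope_i + w3 * integral of the omics profile."""
    slope = ols_slope(p.pheno_times, p.pheno_values)
    omics_area = trapezoid(p.omics_times, p.omics_values)
    return w1 * p.prs + w2 * slope + w3 * omics_area


# Placeholder weights; in the protocol they come from penalized regression.
patient = PatientData(prs=1.2, pheno_times=[0, 1, 2], pheno_values=[3.0, 3.2, 3.4],
                      omics_times=[0, 1, 2], omics_values=[1.0, 2.0, 3.0])
score = hgi_auc_score(patient, w1=0.5, w2=2.0, w3=0.1)
```

Here the slope is 0.2 per year and the omics area is 4.0, so the score is 0.6 + 0.4 + 0.4 = 1.4. In practice the components would usually be standardized before fitting so the weights are comparable.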

Protocol 2: In Vitro Functional Validation of an HGI-AUC Priority Target

This protocol is for validating the role of a gene (e.g., PHACTR1) prioritized by HGI-AUC in endothelial cell dysfunction.

- Cell Culture: Primary Human Coronary Artery Endothelial Cells (HCAECs), passages 3-6.

- Gene Modulation: Transfect HCAECs with siRNA targeting the gene of interest (si-GENE) or a non-targeting control (si-NT) using a lipid-based transfection reagent. Include a rescue condition with overexpression of an siRNA-resistant cDNA construct.

- Inflammatory Stimulation: At 48h post-transfection, treat cells with TNF-α (10 ng/mL) or vehicle for 6-24h.

- Readouts:

  - qPCR: Measure expression of adhesion molecules (VCAM-1, ICAM-1).

  - Flow Cytometry: Quantify surface protein levels of VCAM-1/ICAM-1.

  - Monocyte Adhesion Assay: Fluorescently label THP-1 monocytes (e.g., with Calcein AM), add them to the HCAEC monolayer, wash off non-adherent cells, and quantify the adherent cells.

- Statistical Analysis: Compare means across groups (si-NT vs. si-GENE under stimulation) using one-way ANOVA with post-hoc tests.
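The qPCR readout is commonly summarized as relative expression with the 2^(−ΔΔCt) (Livak) method. A minimal sketch follows, assuming approximately 100% amplification efficiency for both target and reference assays, a single housekeeping reference gene (the protocol does not name one, so the choice is an assumption), and the mean Ct across technical replicates. The Ct values are hypothetical.

```python
from statistics import mean
from typing import Sequence


def fold_change_ddct(target_ct_test: Sequence[float], ref_ct_test: Sequence[float],
                     target_ct_calibrator: Sequence[float],
                     ref_ct_calibrator: Sequence[float]) -> float:
    """Relative expression 2^(-ddCt) of the test condition vs. the calibrator."""
    d_ct_test = mean(target_ct_test) - mean(ref_ct_test)
    d_ct_calibrator = mean(target_ct_calibrator) - mean(ref_ct_calibrator)
    dd_ct = d_ct_test - d_ct_calibrator
    return 2.0 ** (-dd_ct)


# Hypothetical VCAM-1 Ct values: si-GENE + TNF-a (test) vs. si-NT + TNF-a (calibrator).
fc = fold_change_ddct([24.0, 24.0], [18.0, 18.0], [22.0, 22.0], [18.0, 18.0])
print(fc)  # 0.25
```

A ΔΔCt of +2 cycles corresponds to a four-fold lower expression (0.25) after knockdown. Per-replicate fold changes, rather than this single summary, would feed the ANOVA.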

Visualizations

HGI-AUC Calculation Workflow for CAD

Functional Validation of an HGI-AUC Target in Inflammation

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in CAD HGI-AUC Research | Example Product/Catalog |
|---|---|---|
| Genotyping Array | High-density SNP profiling for PRS calculation. | Illumina Global Screening Array v3.0 |
| scRNA-seq Kit | Profiling cellular heterogeneity in atherosclerotic plaques. | 10x Genomics Chromium Next GEM Single Cell 3' Kit |
| siRNA Pool | Knockdown of HGI-AUC-prioritized genes for functional assays. | Dharmacon ON-TARGETplus Human siRNA SMARTpool |
| Primary HCAECs | Primary cell model for studying endothelial dysfunction. | Lonza CC-2585 |
| Recombinant Human TNF-α | Key inflammatory cytokine for stimulating endothelial cells. | PeproTech 300-01A |
| VCAM-1 Antibody (Flow) | Quantifying endothelial activation state. | BioLegend 305805 (clone 6C7) |
| qPCR Probe Assay | Quantifying gene expression changes (e.g., ICAM-1). | Thermo Fisher Scientific Hs00164932_m1 |
| Calcein AM Dye | Fluorescent labeling of monocytes for adhesion assays. | Thermo Fisher Scientific C1430 |

Optimizing HGI-AUC Analysis: Troubleshooting Bias, Overfitting, and Performance Limits

Within Human Genetic Integration (HGI) research, particularly in the calculation of the area under the curve (AUC) for polygenic risk scores or interaction effect sizes, robust methodology is paramount. Three pervasive sources of bias (population stratification, batch effects, and phenotype misclassification) can severely distort AUC estimates, leading to inflated type I error, reduced power, and irreproducible findings. This technical guide details the nature of these pitfalls and their impact on HGI-AUC research, and provides protocols for their identification and mitigation.

Population Stratification in HGI-AUC Studies

Population stratification (PS) refers to systematic differences in allele frequencies between subpopulations of different ancestry. It becomes a confounder when those subpopulations also differ in phenotype (for example, in baseline disease prevalence). In HGI-AUC analysis, PS can create spurious gene-gene or gene-environment interaction signals if the subpopulation structure correlates with both the genetic variants and the phenotype.

Impact on AUC: PS can artificially inflate or deflate the observed AUC of a predictive model. For instance, if a genetic variant is common in a subpopulation with a higher baseline disease prevalence, it may appear predictive even when it has no effect on disease within any subpopulation, inflating the ROC AUC.
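A small deterministic example makes the mechanism concrete. It builds two hypothetical subpopulations in which carrier status is independent of disease within each group, but one group has both a higher carrier frequency and a higher disease prevalence. The counts are invented for illustration and are not drawn from any study; for a binary predictor the ROC AUC is computed as 0.5 × (1 + TPR − FPR).

```python
def build_stratum(n_cases, case_carriers, n_controls, control_carriers):
    """Return (carrier, is_case) pairs for one ancestry group."""
    people = [(1, 1)] * case_carriers + [(0, 1)] * (n_cases - case_carriers)
    people += [(1, 0)] * control_carriers + [(0, 0)] * (n_controls - control_carriers)
    return people


def binary_auc(people):
    """ROC AUC of a 0/1 predictor: 0.5 * (1 + TPR - FPR)."""
    cases = [c for c, y in people if y == 1]
    controls = [c for c, y in people if y == 0]
    tpr = sum(cases) / len(cases)
    fpr = sum(controls) / len(controls)
    return 0.5 * (1.0 + tpr - fpr)


# Group A: 80% carriers, 30% prevalence; group B: 20% carriers, 10% prevalence.
# Within each group, cases and controls have the same carrier frequency.
group_a = build_stratum(300, 240, 700, 560)
group_b = build_stratum(100, 20, 900, 180)

pooled = binary_auc(group_a + group_b)                # confounded by ancestry
within = [binary_auc(group_a), binary_auc(group_b)]  # stratified by ancestry
print(round(pooled, 3), within)  # 0.594 [0.5, 0.5]
```

Pooling the groups yields an AUC of about 0.59 for a variant with no effect in either group, while the stratified AUCs are exactly 0.5.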

Quantitative Data Summary:

Table 1: Representative Impact of Uncorrected Population Stratification on Reported HGI-AUC Metrics

| Study Design | Uncorrected AUC (95% CI) | Corrected AUC (95% CI) | Inflation (ΔAUC) |

|---|---|---|---|